The Path to Enterprise AI: Ambition Meets Reality

Most enterprises experimenting with AI today are following a familiar path. They begin with a bold vision - transforming efficiency, reducing costs, or creating new customer experiences.

These initiatives typically aim to achieve one or more of the following:

- Generating more revenue

- Improving efficiency and reducing operational costs

- Reducing risks

To give a few examples:

- A global bank piloting AI front-desk agents to accelerate onboarding and reduce customer drop-off.

- A healthcare provider exploring automated prescription generation to support doctors with routine cases.

- A retail chain using AI demand forecasting to prevent empty shelves and reduce overstock.

- An insurance company deploying AI-based claims automation to cut processing times and detect fraud faster.

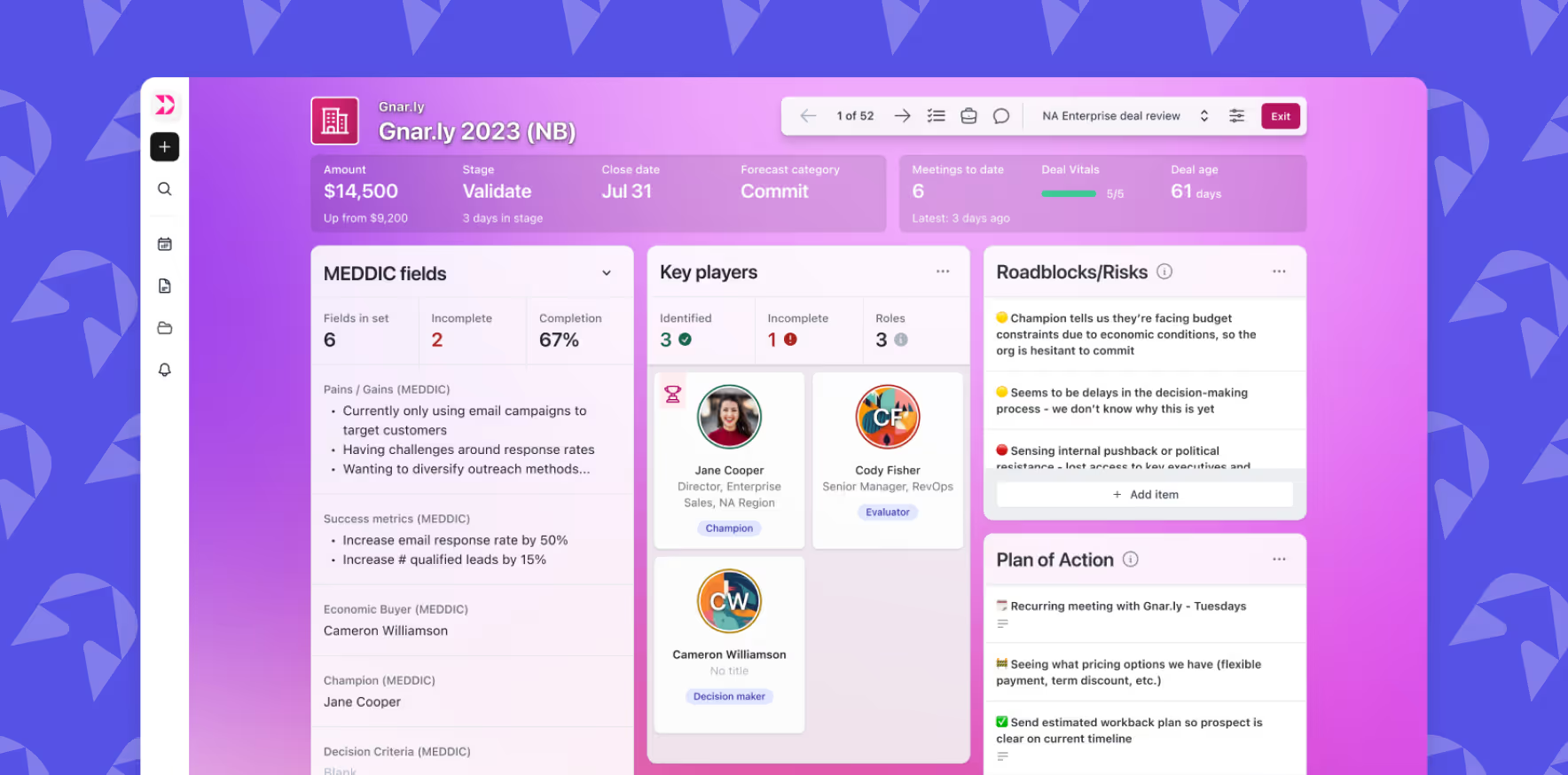

When we spoke with dozens of data and AI teams leading these efforts, we started to notice a pattern. A promising process for implementing AI typically looks like this:

A Promising Process for Implementing AI

Defining clear business objectives

- What business impact do we want to achieve?

- Example: “Increase bank efficiency by replacing front-desk customer service with AI agents.”

- Other examples: A healthcare provider looking to reduce wait times by automating common prescriptions; a retailer aiming to minimize stockouts by using AI for demand forecasting.

Defining success criteria

- How can we measure success?

- Example: “By 2026, 30% of customer service interactions will be handled by AI agents.”

- Other examples: “Reduce patient onboarding time by 20% through AI-assisted registration”; “Cut excess inventory by 15% within 12 months using AI-powered forecasting.”

Defining stakeholders

- Who are the main customers, end users, and points of contact?

- Who bridges the business and technical teams?

- Who provides support and drives adoption?

- Example: In an insurance company, claims adjusters are end users, customer service leaders are business sponsors, IT owns system integration, and a cross-functional AI taskforce mediates between teams.

Defining rollout phases

- From POC → Alpha → Beta → Private GA → Public GA.

- What do we measure to move between phases?

- How do we reduce risks?

- Example: A retail chain might start with a single product category (POC), expand to a few stores (Alpha), roll out to a region (Beta), expand nationally with safeguards (Private GA), and then make it standard across all operations (Public GA).
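
One way to make "what do we measure to move between phases?" concrete is to write the gate criteria down as data. The sketch below is illustrative only: the phase names come from the list above, while the metric names (`min_accuracy`, `min_weekly_users`, `max_open_incidents`) and every threshold are hypothetical assumptions, not recommendations.

```python
from dataclasses import dataclass

PHASES = ["POC", "Alpha", "Beta", "Private GA", "Public GA"]


@dataclass(frozen=True)
class Gate:
    """Minimum bar a phase must clear before promotion (hypothetical metrics)."""
    min_accuracy: float      # share of outputs judged correct
    min_weekly_users: int    # adoption signal
    max_open_incidents: int  # unresolved reliability incidents


# Hypothetical thresholds, keyed by the phase being exited.
GATES = {
    "POC": Gate(0.80, 5, 3),
    "Alpha": Gate(0.85, 25, 2),
    "Beta": Gate(0.90, 200, 1),
    "Private GA": Gate(0.95, 1000, 0),
}


def next_phase(current: str, accuracy: float, weekly_users: int, open_incidents: int) -> str:
    """Return the next phase if the gate is cleared, else stay in the current one."""
    gate = GATES.get(current)
    if gate is None:  # Public GA (or an unknown phase) has no further gate
        return current
    cleared = (
        accuracy >= gate.min_accuracy
        and weekly_users >= gate.min_weekly_users
        and open_incidents <= gate.max_open_incidents
    )
    return PHASES[PHASES.index(current) + 1] if cleared else current
```

Keeping the gates in one place makes the promotion rule explicit and reviewable by business and technical stakeholders alike, and a failed gate becomes a measurable gap rather than a matter of opinion.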

Assembling the right teams

- Example: A global bank combines data science teams (to tune the models), compliance (to ensure regulatory guardrails), IT (for infrastructure), and line-of-business managers (to validate business outcomes).

Building and rolling out

- Example: In a healthcare pilot, this could mean training the model on validated patient records, integrating it into the doctor’s workflow, and releasing the first version in select clinics.

Iterating across phases and success criteria

- Example: A logistics company deploying AI route optimization iterates by comparing delivery-time reductions in each phase and adjusting based on real driver feedback before expanding rollout.

This process is a promising foundation, but in many cases, it still falls short.

We hear it more and more: the main challenge for AI today is not the technology - it's adoption. The models are already highly capable, and the tooling around them is maturing fast. The real challenge is earning trust and driving adoption.

This is why the only way to truly capitalize on your AI investment is to measure reliability. Many initiatives stall because they lack monitoring and measurement. Without them - especially in the face of constant change - trust erodes.

As Armand Ruiz, VP of AI Platform at IBM, recently pointed out: enterprises don't fail at AI because the models aren't good enough - they fail because adoption doesn't scale without reliability and trust.

Similarly, Informatica emphasized in their blog on AI in automotive that data quality and governance are essential prerequisites for AI adoption at scale.

Most enterprises we've been speaking with are still in beta for their two or three main AI initiatives. The vision is clear, and the proofs of concept are promising. Yet something prevents them from scaling and driving real adoption: a lack of trust and reliability.

Just this week, a Wall Street Journal article revealed how Taco Bell is rethinking its drive-through AI deployment after viral customer complaints about reliability issues, including systems crashing when processing unusual orders. This real-world example perfectly illustrates our point: without robust reliability metrics and monitoring, even major implementations with millions of processed orders can quickly lose customer trust. The consequences of unreliable AI aren't theoretical - they're playing out in public, eroding brand confidence and forcing companies to reconsider their AI strategies.

The Pillars of AI Reliability

AI reliability is not just about making sure your model is available. It’s about ensuring the entire lifecycle - from data to model to output - is trustworthy, measurable, and aligned with business goals.


Here are the key building blocks:

1. Visibility

You can’t manage what you can’t see. Granular, end-to-end visibility is essential:

- Data → Model & Prompt → Output — understand the full chain.

- Which data sources are feeding each agent?

- Which tools, automations, and teams rely on these agents?

- Where are the weak links?

Visibility ensures you know what’s happening inside the black box of AI, so you can trace issues back to their root causes.
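
Tracing an issue back to its root cause is, at its core, a graph traversal over lineage metadata. The sketch below assumes a hand-written lineage map with hypothetical asset names (`support_agent_output`, `crm_accounts`, and so on); in practice this metadata would come from whatever catalog or lineage tooling you already use.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets it reads from.
UPSTREAM = {
    "support_agent_output": ["support_agent_prompt", "kb_articles", "customer_profile"],
    "customer_profile": ["crm_accounts", "billing_events"],
    "kb_articles": ["cms_export"],
}


def upstream_of(asset: str) -> list[str]:
    """Breadth-first walk returning every asset that feeds `asset`, nearest first."""
    seen, order, queue = {asset}, [], deque([asset])
    while queue:
        for parent in UPSTREAM.get(queue.popleft(), []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


def downstream_of(asset: str) -> list[str]:
    """Every asset in the map that depends, directly or indirectly, on `asset`."""
    return sorted(a for a in UPSTREAM if asset in upstream_of(a))
```

`upstream_of` answers "which data sources are feeding this agent?", while `downstream_of` answers "what breaks if this source goes bad?".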

2. Governance

AI without governance is a liability. You need frameworks to prevent risks and ensure compliance:

- Prevent sensitive data from being ingested, used in training, or exposed in outputs.

- Verify that input data comes from a source of truth rather than from duplicated or inconsistent sources.

- Avoid proliferation of “shadow AI agents” where different teams rely on inconsistent, ungoverned systems.

Good governance isn’t about slowing innovation; it’s about scaling responsibly.
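
As a small illustration of the first rule, outputs can be scanned and redacted before they leave the system. This is a minimal sketch, not a complete control: the two patterns (a rough email matcher and a US-style SSN) and the placeholder format are assumptions chosen for brevity, and real PII detection needs far broader coverage.

```python
import re

# Simplistic, illustrative patterns; not sufficient for production PII detection.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with a placeholder and count hits per PII type."""
    counts: dict[str, int] = {}
    for name, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{name.upper()} REDACTED]", text)
        if n:
            counts[name] = n
    return text, counts
```

Returning the counts alongside the cleaned text lets the same check double as a governance metric: a rising number of redactions is a signal that sensitive data is leaking into the pipeline upstream.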

3. Quality

AI outputs are only as good as the data and prompts that power them. That means:

- Monitoring and measuring the quality and accuracy of inputs (garbage in, garbage out).

- Monitoring and measuring the quality and accuracy of outputs across dimensions like correctness, completeness, bias, and clarity.

Defining what “quality” means for your use case—and measuring it consistently—is at the core of AI reliability.
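
A simple way to pin down a definition of quality is a weighted rubric. In the sketch below the four dimensions come from the list above, but the weights and the 0.8 threshold are hypothetical, and how each per-dimension score is produced (human review, automated evaluation, or both) is deliberately left out of scope.

```python
# Dimensions come from the text above; the weights are hypothetical.
# Each score is expected in [0, 1], where 1.0 is best
# (for "bias", 1.0 means no bias was detected).
WEIGHTS = {"correctness": 0.4, "completeness": 0.3, "bias": 0.2, "clarity": 0.1}


def quality_score(scores: dict[str, float]) -> float:
    """Weighted average of the per-dimension scores."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    return sum(WEIGHTS[d] * scores[d] for d in WEIGHTS)


def pass_rate(batch: list[dict[str, float]], threshold: float = 0.8) -> float:
    """Share of outputs in a batch whose weighted score meets the threshold."""
    if not batch:
        return 0.0
    return sum(quality_score(s) >= threshold for s in batch) / len(batch)
```

Tracking `pass_rate` over time, rather than inspecting individual outputs, is what turns a quality definition into something that can be measured consistently.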

4. Usage & Usefulness

AI reliability also means adoption. If your AI is “technically working” but nobody uses it, it isn’t reliable. Ask:

- Is it being used?

- Who is using it?

- How often? Do users really rely on its outputs?

- Is the output useful? Does it represent an accurate view of reality?

Tracking usage and usefulness ensures you’re building systems people trust enough to integrate into their daily work.
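
The questions above map onto a handful of metrics that can be computed from an interaction log. The sketch below assumes a hypothetical `Event` record with a `user` and an `accepted` flag; what counts as "accepted" (for example, the user acting on the output without overriding it) is an assumption you would need to define for your own use case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One AI interaction; the fields are hypothetical."""
    user: str
    accepted: bool  # did the user act on the output without overriding it?


def usage_summary(events: list[Event], eligible_users: int) -> dict[str, float]:
    """Summarize who uses the AI, how often, and whether they rely on it."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    active = {e.user for e in events}
    accepted = sum(e.accepted for e in events)
    return {
        # Is it being used, and by whom?
        "adoption_rate": len(active) / eligible_users,
        # How often?
        "interactions_per_active_user": len(events) / len(active) if active else 0.0,
        # Do users really rely on its outputs?
        "acceptance_rate": accepted / len(events) if events else 0.0,
    }
```

A high adoption rate paired with a low acceptance rate is a useful warning sign: people are trying the system but not trusting what it returns.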

The Path Forward

Enterprises know the promise of AI, but scaling from beta pilots to enterprise-wide adoption requires reliability and trust as first-class priorities.

That means:

- Visibility into the entire AI pipeline.

- Governance to prevent risk and inconsistency.

- Quality monitoring of inputs and outputs.

- Usage and usefulness tracking to ensure adoption.

Without these, AI initiatives stall in proof-of-concept limbo. With them, enterprises can move confidently through rollout phases and unlock the full business value of AI.

The bottom line: the main challenge for AI today is reliability. Technology is no longer the bottleneck. Processes, trust, and adoption are. And the foundation for adoption is reliability.

This is why the only way to fully capitalize on your AI investment is to measure reliability and adoption - making AI not only powerful, but trusted and embedded into the way your business operates.

Our AI reliability platform is designed to address these challenges directly, providing the visibility, governance, quality monitoring, and usage metrics enterprises need. To learn more about how Elementary can help you achieve AI reliability, schedule a call with our team.

FAQ

What is AI reliability?

AI reliability refers to the trustworthiness, consistency, and business alignment of an AI system—across data, models, prompts, and outputs. It’s not just about uptime; it’s about whether the system produces useful, accurate, and expected results over time.

Why is AI reliability important?

Without reliability, AI adoption stalls. Even the most powerful models won’t be used if their outputs are inconsistent, hard to trace, or misaligned with business goals. Reliability enables trust, and trust drives adoption.

What are the components of AI reliability?

The four key pillars are:

- Visibility – full pipeline observability from data to output.

- Governance – controls to prevent risk, enforce compliance, and align teams.

- Quality – monitoring of both input and output accuracy.

- Usage & usefulness – adoption metrics that show whether people rely on the AI.

How do you measure AI reliability?

Measuring AI reliability means tracking:

- Data quality and test coverage

- Output accuracy, completeness, and clarity

- Prompt lineage and model versioning

- Usage metrics across tools and teams

- Governance adherence and risk events

What causes enterprise AI projects to fail?

Most failures happen not because of bad models, but due to:

- Lack of visibility into AI pipelines

- No ownership or governance

- Poor monitoring of outputs and usage

- Teams not trusting or using the AI outputs

How can you scale AI reliably across the enterprise?

To scale AI successfully, enterprises must embed reliability into every rollout phase. That includes aligning stakeholders, measuring success at each phase, and maintaining consistent monitoring across data, models, and outputs.

How does Elementary help with AI reliability?

Elementary provides the observability, governance, and quality monitoring needed to make AI systems reliable and scalable.

With Elementary, you can:

- Monitor the quality of input data across your AI workflows

- Track reliability metrics like agent health, hallucination and failure rates, user feedback, and data quality across freshness, completeness, volume, and validity

- Define and enforce governance rules (e.g., prevent PII exposure, tag critical data assets)

- Track adoption and usage of AI outputs by teams and tools

- Identify reliability issues before they erode trust or cause failures

Whether you're powering agents, copilots, or internal LLM tools, Elementary helps you make AI reliable enough to trust—and measurable enough to scale.