AI chat is everywhere now. Whether it's answering customer questions or helping users navigate your app, it's turning into a must-have feature for modern products. So when we decided to build one into our product, we wanted to do it quickly - but also in a way that fits our Python-first backend.

Vercel’s open-source SDK made it easy to build a powerful AI chat frontend - but getting it to work with our Python backend took some experimentation. What started as a Node.js PoC ended with us creating a Python-native SDK that is fully compatible with Vercel’s frontend SDK and protocol.

And since not every team uses our exact stack of FastAPI and LangChain, we designed it to be modular and extensible, making it easy to plug in other agent or API frameworks. Here’s the story of how it came together - the making of the open-source package py-ai-datastream.

What We Are Building

We’re Elementary Data, a data observability platform built for modern data teams. Our mission is to help organizations monitor, understand, and improve their data pipelines with minimal friction.

AI plays a big role in our product vision. We’re building several AI-powered agents to streamline and automate common data engineering workflows, such as:

- Triage and resolution agent: Diagnoses and suggests fixes for data issues in real time.

- Governance agent: Detects missing documentation, stale datasets, or unclear ownership.

- Catalog agent: Helps understand and explore your data catalog with ease.

- Test coverage agent: Finds and resolves test coverage gaps.

- Performance and cost agent: Identifies redundant or costly transformations.

To support these use cases, embedding an intuitive and powerful AI chat experience into our platform was the next logical step.

Here’s what that experience looks like in action:

Background and Terminology

Before diving into the details, here’s a quick primer on some of the core concepts and terminology used throughout this post:

- Agent: A decision-making component—often built using frameworks like LangChain—that interprets user input, selects which tools to invoke, and synthesizes a final response. Agents are typically responsible for orchestrating multi-step reasoning and task execution in AI workflows.

- Tool: A discrete function or capability that an agent can call upon to perform a specific task, such as querying data, executing code, or triggering an API. Tools are often modular and are registered with the agent as part of its action space.

- MCP (Model Context Protocol): An open protocol for connecting AI models to external tools and data. An MCP server is a process that exposes tools (and other resources) that an agent can discover and call through a standard interface, often interfacing with other backend systems and data services.

- AI Streaming: A method of delivering AI-generated output (typically token-by-token) over an HTTP stream to the frontend, allowing responses to appear incrementally. This improves perceived latency and enables richer, real-time interactions in chat interfaces.
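To make the agent and tool roles concrete, here is a toy sketch in plain Python. It is not LangChain's API: the `TOOLS` registry, the `register_tool` decorator and the `get_weather` tool are hypothetical, and the model's reasoning is skipped so that only the final step (the agent picks a tool by name, and the tool runs) is shown.

```python
from typing import Any, Callable, Dict

# Hypothetical registry standing in for an agent's action space.
# Frameworks such as LangChain provide their own tool abstractions instead.
TOOLS: Dict[str, Callable[..., Any]] = {}


def register_tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a tool under its own name."""
    TOOLS[func.__name__] = func
    return func


@register_tool
def get_weather(location: str) -> str:
    # Hypothetical tool: a real one would query a weather API.
    return f"The weather in {location} is sunny"


def dispatch(tool_name: str, args: Dict[str, Any]) -> Any:
    """Run the tool the agent selected, passing its JSON-style arguments."""
    if tool_name not in TOOLS:
        raise KeyError(f"Unknown tool: {tool_name}")
    return TOOLS[tool_name](**args)


print(dispatch("get_weather", {"location": "San Francisco"}))
# The weather in San Francisco is sunny
```

In a real agent, the model produces the tool name and arguments; the registry is what lets the runtime map that choice back to code.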

The First Attempt: Fast but Fragile

To get something working quickly, we spun up a PoC using Vercel's excellent vercel/ai SDK. It provides a clean, intuitive API and great streaming support, which made it easy to get started while still delivering a smooth AI chat experience.

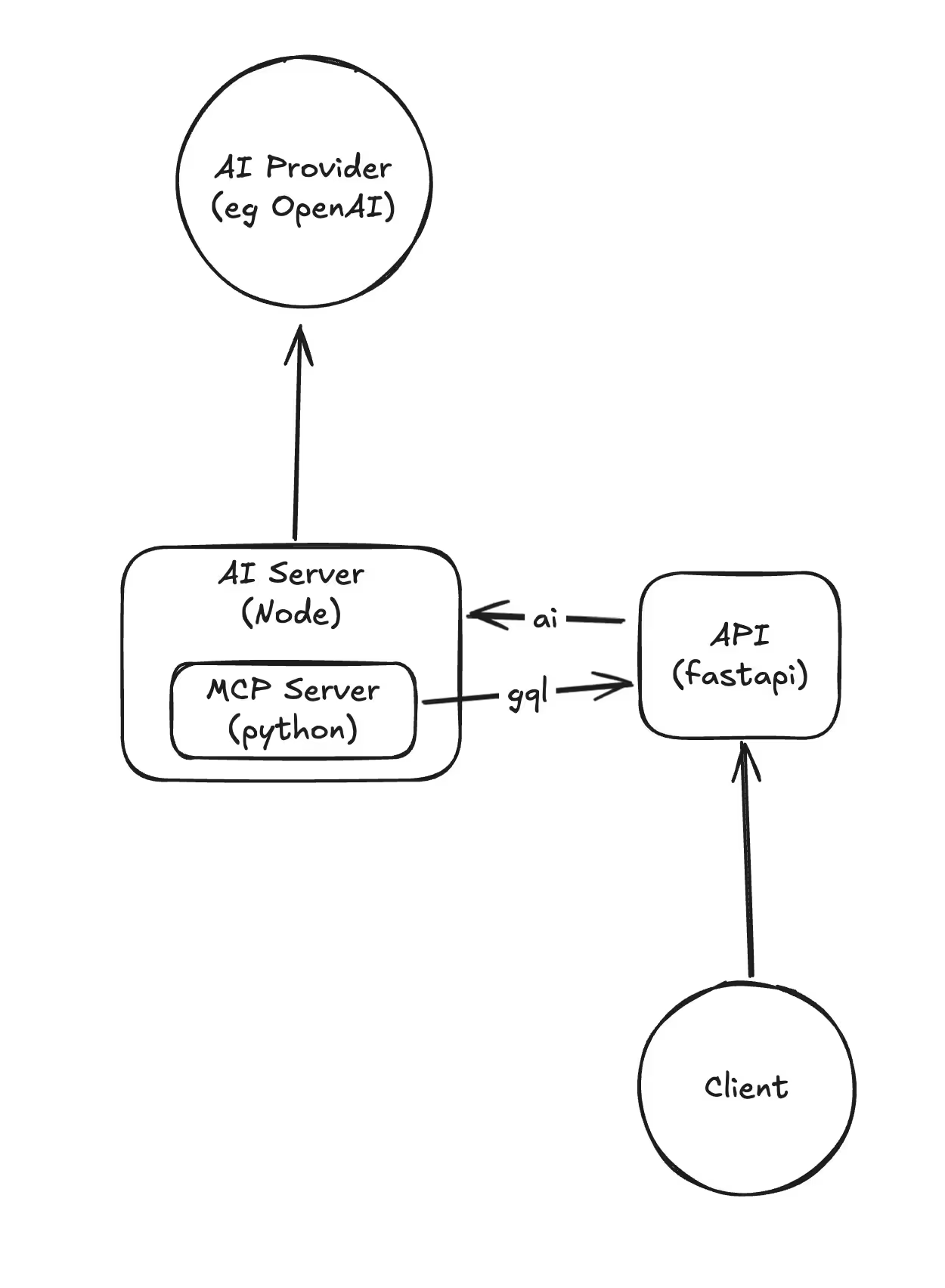

We created a new Node.js server (which we called the "AI server") that used this SDK to manage model communication. But our backend was in Python, and we needed access to our business logic. To work around this, we ran a Python process (our MCP server) alongside the Node server, exposing the tools that needed that logic, and piped messages between the two.

To make this whole thing work with our existing user management, we had our main Python API server forward requests to the AI server after handling authentication and authorization. The AI server would then stream the response back through the original request chain.

With GraphQL acting as our semantic layer - and living inside the API server - our tools had to query it mid-flow, calling back into the same API server that had just forwarded the request. This led to recursive request loops and repeated auth checks, compounding the deployment complexity even further.

It was messy, but it worked. We had a working AI chat that we could play around with in record time.

The Deployment Headache

What started as a quick win quickly became a tangled mess:

- We were managing two different runtimes: Python and Node.

- Every AI request had to take a detour through our main API server.

- Deployments became brittle. Testing and scaling were harder than they needed to be.

- Our use of GraphQL as a semantic layer introduced circular queries and redundant authentication flows, compounding the complexity.

The Vercel SDK was great, but it wasn’t built to integrate easily into a Python/FastAPI backend. We wanted a cleaner solution that played nicely with our stack.

Digging into the Protocol

While it was clear that we needed a Python-based backend, we did not want to lose the significant benefits Vercel’s SDK gave us on the frontend. So we asked ourselves - what if we could keep the frontend exactly the same, and just make the backend speak the same protocol?

Fortunately, Vercel’s Data Stream Protocol is well-documented (you can find the docs here). We studied the spec and realized that we could implement a compatible backend ourselves - in Python.

The way it works is that the server sends a streaming HTTP response in which each line is one event in the format `{event_type}:{data}`, where:

- `event_type`: a single character representing the type of event (e.g., `0` for text, `9` for a tool call)

- `data`: JSON-serialized data whose shape depends on the event type
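In Python, producing a single event takes only a couple of lines. The sketch below assumes, as in the example stream that follows, one event per line; `format_part` is a hypothetical helper name rather than something taken from py-ai-datastream.

```python
import json
from typing import Any


def format_part(event_type: str, data: Any) -> str:
    """Serialize one event as '{event_type}:{data}' followed by a newline."""
    if len(event_type) != 1:
        raise ValueError("event_type must be a single character")
    # The payload is always JSON, so even plain text chunks end up quoted.
    return f"{event_type}:{json.dumps(data)}\n"


print(format_part("0", "Hello"), end="")               # 0:"Hello"
print(format_part("f", {"messageId": "123"}), end="")  # f:{"messageId": "123"}
```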

An example stream of a weather assistant agent might look something like this:

f:{"messageId": "123"}

0:"Hello, I am a help"

0:"ful assistant that can ch"

0:"eck the weather, let me do that"

9:{"toolCallId": "tool-123", "toolName": "get_weather", "args": {"location": "San Francisco"}}

a:{"toolCallId": "tool-123", "result": "The weather in San Francisco is sunny"}

e:{"finishReason": "tool-calls", "usage": {"promptTokens": 10, "completionTokens": 20}, "isContinued": false}

f:{"messageId": "456"}

0:"The weather in San Francisco is sunny"

e:{"finishReason": "stop", "usage": {"promptTokens": 10, "completionTokens": 20}, "isContinued": false}

d:{"finishReason": "stop", "usage": {"promptTokens": 10, "completionTokens": 20}}
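Reading such a stream back is a useful debugging aid, for example when checking that a backend emits what the frontend expects. The sketch below parses an abridged version of the stream above into (event_type, data) pairs and rebuilds the visible text of each step. `parse_stream`, `step_texts` and the sample are hypothetical, and the code assumes one event per line, ignoring blank lines.

```python
import json
from typing import Any, Iterable, Iterator, List, Tuple

# Abridged sample stream (usage fields dropped for brevity).
SAMPLE = """f:{"messageId": "123"}
0:"Hello, I am a help"
0:"ful assistant"
9:{"toolCallId": "tool-123", "toolName": "get_weather", "args": {"location": "San Francisco"}}
a:{"toolCallId": "tool-123", "result": "The weather in San Francisco is sunny"}
e:{"finishReason": "tool-calls", "isContinued": false}
f:{"messageId": "456"}
0:"The weather in San Francisco is sunny"
e:{"finishReason": "stop", "isContinued": false}
d:{"finishReason": "stop"}"""


def parse_stream(lines: Iterable[str]) -> Iterator[Tuple[str, Any]]:
    """Split each '{event_type}:{data}' line at the first colon and decode the JSON."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between events
        event_type, _, payload = line.partition(":")
        yield event_type, json.loads(payload)


def step_texts(events: Iterable[Tuple[str, Any]]) -> List[str]:
    """Concatenate text ('0') chunks, starting a new entry at each step start ('f')."""
    texts: List[str] = []
    for event_type, data in events:
        if event_type == "f":
            texts.append("")
        elif event_type == "0":
            if not texts:
                texts.append("")  # text that arrives before any step marker
            texts[-1] += data
    return texts


print(step_texts(parse_stream(SAMPLE.splitlines())))
# ['Hello, I am a helpful assistant', 'The weather in San Francisco is sunny']
```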

Our goals:

- Fully support the Vercel protocol so the React frontend doesn’t need to change.

- Integrate LangChain natively.

- Simplify our deployment to a single Python server.

Building the Python-native Version

We implemented the protocol using FastAPI and LangChain, ensuring compatibility with the event-streaming behavior the Vercel SDK expects. Our implementation faithfully reproduces the data stream format and lifecycle that Vercel’s frontend tooling requires - including streaming tokens as they arrive and sending the final completion signals.
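The lifecycle is the part that is easy to get wrong: text and tool events have to be wrapped in step events (`f` to start a step, `e` to finish it), with a single `d` event closing the whole message. The sketch below shows one way to do that with a plain generator. It is not py-ai-datastream's code: the `(kind, payload)` event tuples are a hypothetical, framework-neutral shape standing in for whatever the agent framework emits, and the token usage is a zero placeholder.

```python
import json
import uuid
from typing import Any, Iterable, Iterator, Tuple

# Hypothetical framework-neutral events an agent adapter might yield:
#   ("text", chunk)                            a piece of model output
#   ("tool_call", (call_id, tool_name, args))  the model requested a tool
#   ("tool_result", (call_id, result))         the tool returned
AgentEvent = Tuple[str, Any]

# Placeholder: a real server would report the model's actual token counts.
USAGE = {"promptTokens": 0, "completionTokens": 0}


def part(event_type: str, data: Any) -> str:
    return f"{event_type}:{json.dumps(data)}\n"


def to_data_stream(events: Iterable[AgentEvent]) -> Iterator[str]:
    """Wrap agent events in step (f ... e) and message (d) lifecycle events."""
    in_step = False
    after_tool_result = False
    for kind, payload in events:
        if in_step and after_tool_result and kind != "tool_result":
            # The model resumed after tool results: close this step, open a new one.
            yield part("e", {"finishReason": "tool-calls", "usage": USAGE, "isContinued": False})
            in_step = False
        if not in_step:
            yield part("f", {"messageId": uuid.uuid4().hex})
            in_step, after_tool_result = True, False
        if kind == "text":
            yield part("0", payload)
        elif kind == "tool_call":
            call_id, tool_name, args = payload
            yield part("9", {"toolCallId": call_id, "toolName": tool_name, "args": args})
        elif kind == "tool_result":
            call_id, result = payload
            yield part("a", {"toolCallId": call_id, "result": result})
            after_tool_result = True
        else:
            raise ValueError(f"Unknown event kind: {kind}")
    # If the stream ends right after tool results, report that as the finish reason.
    finish_reason = "tool-calls" if after_tool_result else "stop"
    if in_step:
        yield part("e", {"finishReason": finish_reason, "usage": USAGE, "isContinued": False})
    yield part("d", {"finishReason": finish_reason, "usage": USAGE})


demo = [
    ("text", "Let me check."),
    ("tool_call", ("tool-123", "get_weather", {"location": "San Francisco"})),
    ("tool_result", ("tool-123", "The weather in San Francisco is sunny")),
    ("text", "It is sunny in San Francisco."),
]
for line in to_data_stream(demo):
    print(line, end="")
```

With FastAPI, a generator like this can be handed to `fastapi.responses.StreamingResponse`, which accepts both sync and async iterators.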

We also designed the system to be modular and framework-agnostic where possible, making it easy to plug in different agent or API frameworks if needed. The result is a maintainable, Python-native backend that integrates seamlessly with both our stack and Vercel-powered frontends.
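One way to get that modularity is to make the protocol layer depend only on a small interface that each agent framework implements. In the sketch below, `AgentAdapter`, `EchoAgent` and `stream_response` are hypothetical names, step events are omitted for brevity, the usage numbers are placeholders, and a real LangChain adapter would translate the framework's streamed output into text chunks (most likely asynchronously).

```python
import json
from typing import Iterator, Protocol


class AgentAdapter(Protocol):
    """The only thing the protocol layer needs from an agent framework."""

    def stream_text(self, message: str) -> Iterator[str]: ...


class EchoAgent:
    """Hypothetical adapter that echoes the input word by word."""

    def stream_text(self, message: str) -> Iterator[str]:
        for word in message.split():
            yield word + " "


def stream_response(agent: AgentAdapter, message: str) -> Iterator[str]:
    """Speak the wire format without knowing which framework is behind `agent`."""
    for chunk in agent.stream_text(message):
        yield f"0:{json.dumps(chunk)}\n"
    usage = {"promptTokens": 0, "completionTokens": 0}  # placeholder counts
    yield f"d:{json.dumps({'finishReason': 'stop', 'usage': usage})}\n"


for line in stream_response(EchoAgent(), "hello world"):
    print(line, end="")
# 0:"hello "
# 0:"world "
# d:{"finishReason": "stop", "usage": {"promptTokens": 0, "completionTokens": 0}}
```

Swapping frameworks then means writing a new adapter class; the code that speaks the wire format stays untouched.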


Open-Sourcing the Solution

We realized this problem isn't unique. Many teams use FastAPI for their backend, and plenty of devs are trying to hook up AI features with modern frontends.

So we decided to open-source our implementation.

It includes:

- A FastAPI server that speaks Vercel's Data Stream Protocol

- LangChain integration out of the box

- A clean architecture ready for production

We built the core to be framework-agnostic. FastAPI and LangChain are supported out of the box, but extending it to other API frameworks (like Django or Flask) or agent runtimes (like Pydantic-AI) should be easy. Contributions and suggestions are welcome!
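Since the protocol output is just an iterator of strings, serving it from another web framework mostly comes down to returning that iterator with the right headers. As a framework-neutral illustration, here is a bare WSGI app (WSGI being the interface Flask apps, and Django's traditional deployments, are served through) using only the standard library. `fake_agent_stream` is a hypothetical placeholder, the request body is ignored, and the `x-vercel-ai-data-stream: v1` header reflects our understanding of what the AI SDK expects from custom backends, so check it against the SDK version you use.

```python
import json
from typing import Any, Callable, Iterable, Iterator, List, Tuple

# Assumption: the AI SDK frontend looks for this header on data-stream responses.
HEADERS: List[Tuple[str, str]] = [
    ("Content-Type", "text/plain; charset=utf-8"),
    ("x-vercel-ai-data-stream", "v1"),
]


def fake_agent_stream() -> Iterator[str]:
    # Hypothetical stand-in for protocol lines produced from a real agent.
    yield f"0:{json.dumps('Hello')}\n"
    usage = {"promptTokens": 0, "completionTokens": 0}  # placeholder counts
    yield f"d:{json.dumps({'finishReason': 'stop', 'usage': usage})}\n"


def app(environ: dict, start_response: Callable[..., Any]) -> Iterable[bytes]:
    """Minimal WSGI app that streams Data Stream Protocol lines as UTF-8 bytes."""
    start_response("200 OK", HEADERS)
    # Returning a generator lets the server send each line as soon as it is produced.
    return (line.encode("utf-8") for line in fake_agent_stream())
```

For a quick local test, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves it on port 8000; in Flask or Django, the same generator would be wrapped in Flask's `Response` or Django's `StreamingHttpResponse`.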

https://github.com/elementary-data/py-ai-datastream

We’ve shared this work so others can skip the duct tape phase and focus on building great AI-powered features - faster and more cleanly.

Takeaways

- Prototyping quickly is great - but watch for long-term complexity.

- Sometimes the best solution isn’t switching tools, but understanding them better.

- Open standards (and great documentation) enable interoperability.

We went from a messy dual-runtime deployment to a clean Python-native AI chat integration. If you're working with FastAPI, LangChain, or Python in general, and want streaming AI responses without the glue code, check out our repo - and let us know what you think!
