Tell me if this sounds familiar: You are looking for a particular dataset, and after asking around (or in a better situation searching in your data catalog), you have found what you are looking for. Or at least you think it is what you need, based on the little information available about this particular dataset. But when was the last time this was updated? Let’s run:

select max(datetime) from our_table

Are there any other issues? Is this key unique?

select customer_id, count(customer_id) from our_table group by 1 order by 2 desc

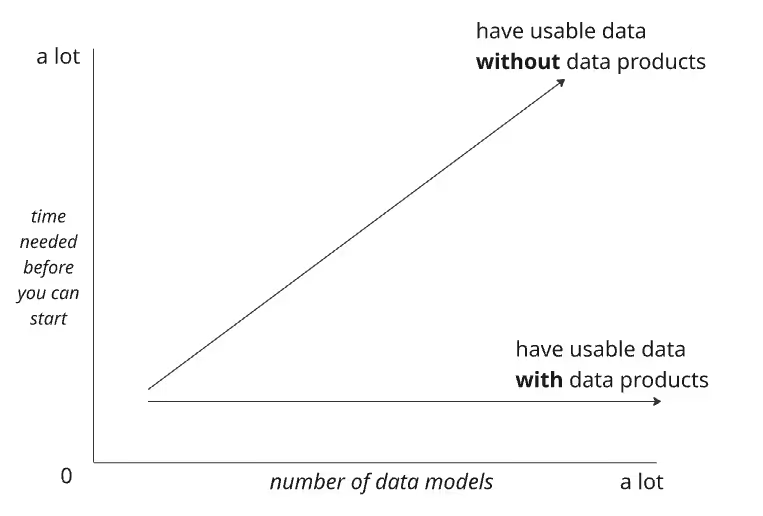

You get the point. It can take hours to prepare before you can actually start doing your analysis or before you can start training your ml model.

After deciding if this data is healthy enough, what do these column names actually mean? ind_acc_2? Sounds less like a metric and more like a password I forgot in 2014? Who owns this data? Any known issues? Who is responsible? Now imagine every Data Analyst, Data Scientist, BI developer has to repeat this process individually. What a waste of time.

This is how it often feels like:

This reflects what I saw in previous jobs and currently with our customers, and why we solve this by using product thinking around our data. In other words, how and why we built data products. Building a network of these data products eventually creates our data mesh.<- click for an interesting article on the subject.

In this post I want to give a behind-the-scenes look at how we build what we call Trusted Data Products with our customers and how to create guidelines (or better yet; rules we can enforce with automation). We can have very theoretical discussions on what Data Products are, but to make this practical, we will focus on the key elements of a Data Product:

- Clear descriptions and definitions of tables and columns

- Tested Data

- Ownership

- Monitoring

In this post I will walk you through how we are creating a data product around our marketing data, and specifically, I will focus on a dataset cpa_and_roas. What we calculate here are two important metrics: cost per acquisition (cpa) and return on ad spend (roas).

Clear Descriptions and Definitions

We are starting with clear descriptions and definitions because this serves two major purposes. First it will allow any of our downstream consumers to very easily understand the data they want to use. The other purpose is to make sure any AI running on your data understands the context. We see that this becomes more and more important, especially when we start using tools like MCP Servers on top of Elementary.

Below you see how to filter on models missing descriptions and how to add these descriptions quickly (with some help from our AI):

All of our governance and test configurations are stored in code. We keep your codebase as the single source of truth because the data platform needs one central place for everything.

All governance and testing rules live in code, so there’s no duplication or confusion between teams. As the platform grows, this is the only way to scale while staying consistent. Everyone works from the same definitions, and every change is tracked and aligned with the rest of the platform. Your catalog, data warehouse, and other tools in your data platform all use this as a single source of truth.

Tested data

Now that we have good definitions, the next step is to ensure that we are testing this model itself, but just as importantly, testing all models upstream of it.

We are going to use the Test Coverage feature to fully understand our level of testing. This shows you the test coverage for each model broken down by the different data quality dimensions. <- Click to see how the UK government uses these dimensions.

To dramatically increase our test coverage we can select models in bulk and add tests to our upstream models. This way we make sure that we have full coverage on the complete lineage of our data product.

If you are unsure on which tests to add, you can have a look at our best practices guide.

Another really helpful feature is to use our AI test recommendations Agent. This agent has been trained on creating reliable tests and using the full context of your codebase to make sure you implement a robust testing strategy while being efficient. In the video below you will see how we are asking the AI Agent to add upstream tests.

Ownership

We can’t have a proper data product without a clear owner. Someone needs to be responsible not only for building the product but also for maintaining its value.

We can assign ownership at an individual level or at the team/domain level. Doing it on an individual level has the advantage that it is really clear who should own it and for others - who to contact in case there are any issues. On a team level, the advantage is having multiple points of contact so there are no issues when people take breaks or leave the company. It is really simple for anyone to filter on- and update the ownership.

This structure makes it clear who to contact when something breaks and ensures that every product has someone invested in its success.

Monitoring

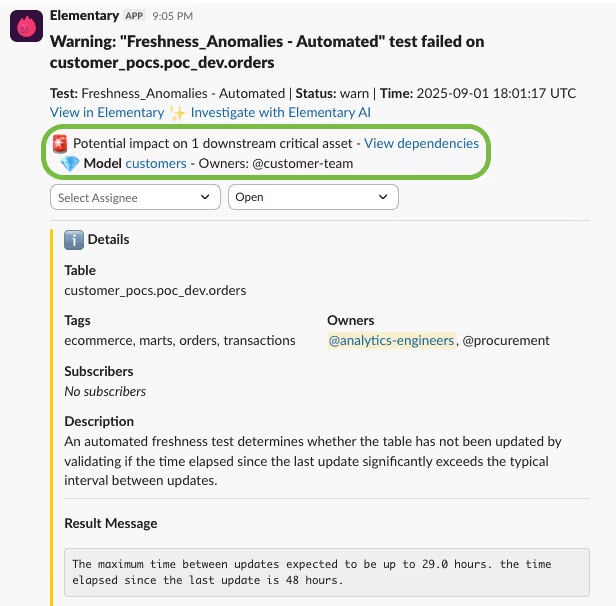

Elementary has an extensive alerts and incident feature. In this specific post I want to focus on our critical assets. Ultimately, what we really want here is to be alert if there are any issues with our critical assets. We will mark our data products as critical assets in the platform. This will allow us to filter on our critical assets in our dashboards and catalog.

What this allows us to do is to say “We want to be alerted if any issue is impacting a critical asset downstream”. Doing this is extremely powerful because we are now not just testing the tables we are exposing as a data product, but the full lineage of these models.

You can see in the green detail what this looks like in our alerts:

Because we have set the ownership, it is now visible for anyone subscribing to this incident to know who to ask questions and potentially warn about issues.

Dashboards

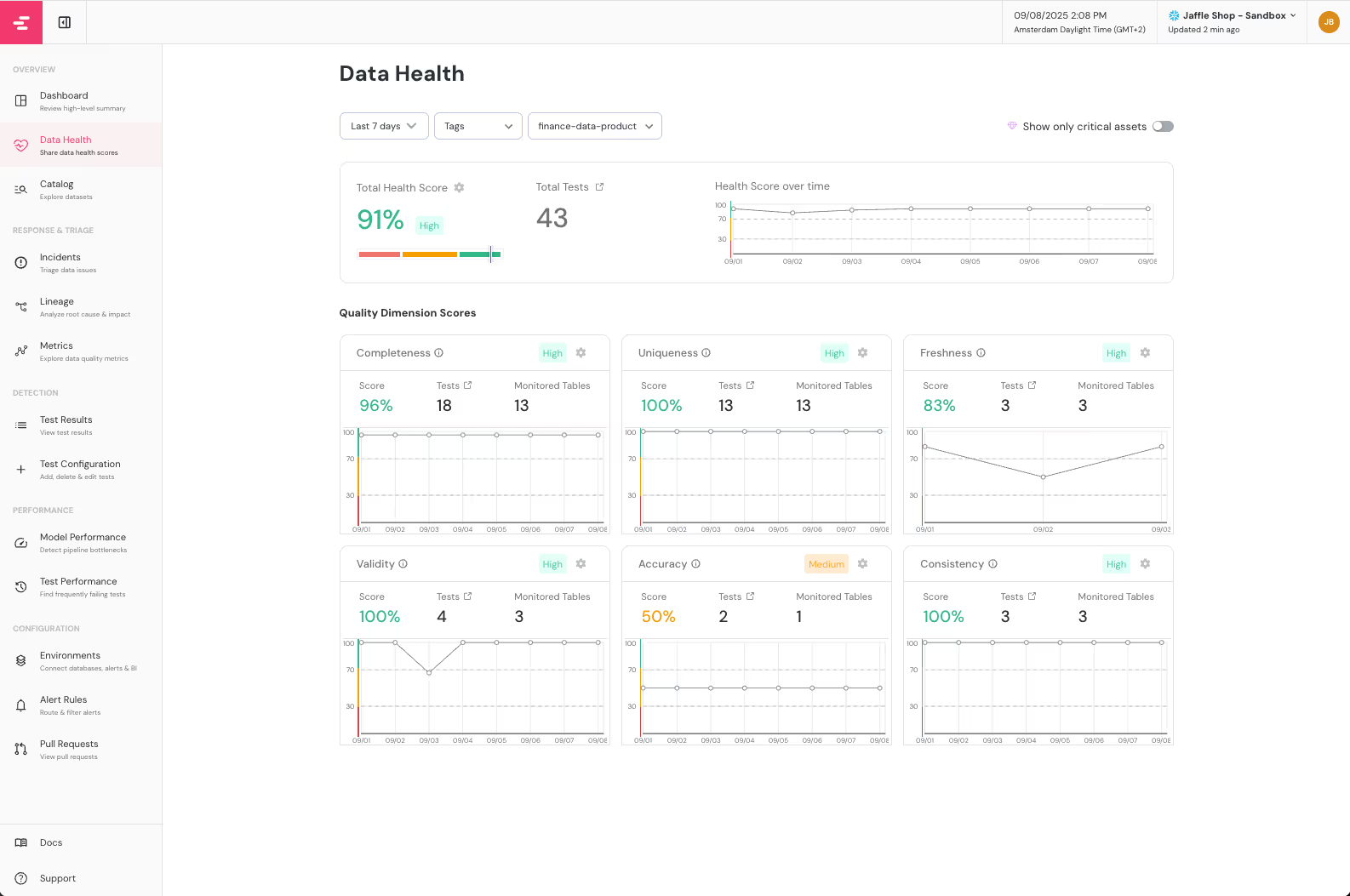

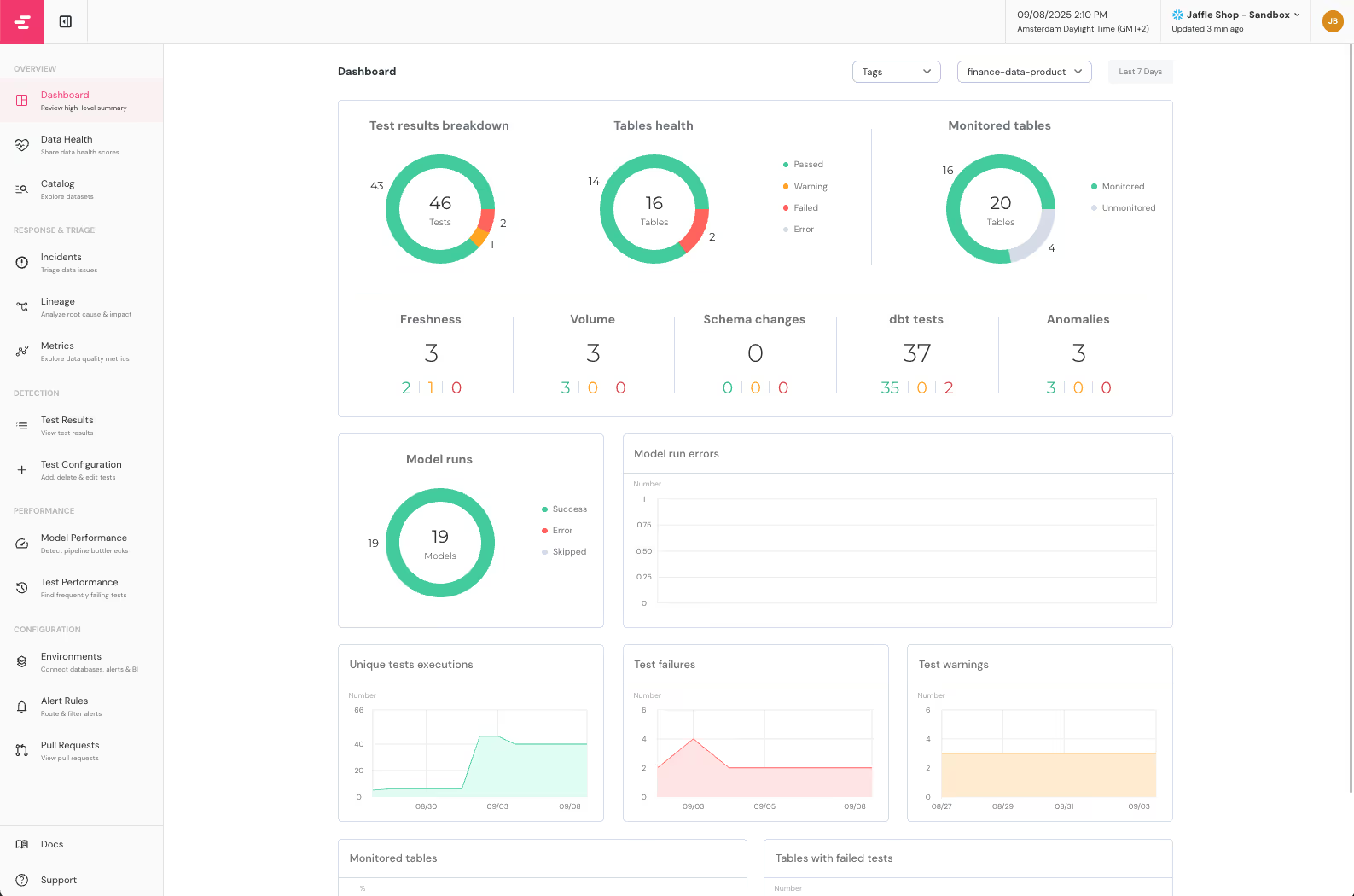

Having done this work allows us to create the bridge between our data producers and all of our data consumers:

- Descriptions allow our consumers (Data Analysts, Data Scientists, etc.) to quickly understand the data.

- Tests will show any of our consumers that the data is tested and healthy. For example, you can share the data health dashboard for a particular data product:

For data producers, we can easily understand the current state of our tables and tests in the executive dashboard

Playbooks

Not only is it important to get a healthy data platform, but we also want to make sure that moving forward, we want to create continuous robustness. This means we want to have certain rules in place that we can either enforce or use as guidelines. What should we minimally add as tests and governance when we add a new model? Uniqueness checks on primary keys? When we add a new test, what should I take into account?

Example checklist:

- [ ] New/changed models include `meta.owner: <team-or-owner>`

- [ ] Model + new columns have descriptions

- [ ] Tests: `unique`/`not_null` on primary key, `not_null` on critical columns

- [ ] Tags applied (e.g., core/pii/domain)

Often, this is just as much a technical question as it is an organizational one. In order to solve both, we often create what we call a playbook with our customers. We start with a template and customize it to the organization we work with.

If you would like to get a copy of the playbook, feel free to email me at joost@elementary-data.com.

Often it helps to automatically enforce some of these rules. There are a couple of ways to do this, either in your CI/CD pipeline, or we can utilize our MCP server in combination with rules we give the AI agent.

Curious about your thoughts! You can email me directly at joost@elementary-data.com or book an intro call with me where we can discuss your specific use cases!

.avif)