Most data reliability work happens outside the daily workflow. Investigating an incident often means switching between the warehouse, dbt, logs, reliability tools such as Elementary, and your IDE. Refactors and schema changes require manual checks across downstream models and dashboards.

The Model Context Protocol (MCP) provides a standard way for AI systems to call external tools. For data teams, that means an IDE or agent can request context from dbt, Snowflake, BigQuery, or a reliability platform like Elementary directly.

This article explains:

- What is MCP

- How MCP works

- How MCP helps with data reliability

- Concrete examples (and demos)

What is MCP?

MCP (Model Context Protocol) was released by Anthropic. It’s a standard that allows large language models to call external tools in a structured way. Instead of asking an AI to “guess,” the model can query a connected MCP server to return a grounded result.

For example, an IDE like Cursor or a tool like Claude Code can connect to different MCP servers: Jira, GitHub, Snowflake, or Elementary. Each server exposes its own tools. When the model sees a request, it knows which tool to call and how to interpret the response.

This changes how data reliability work gets done. Instead of switching between lineage views, warehouse logs, and incident trackers, the engineer’s IDE can pull all of that context directly.

How MCP works

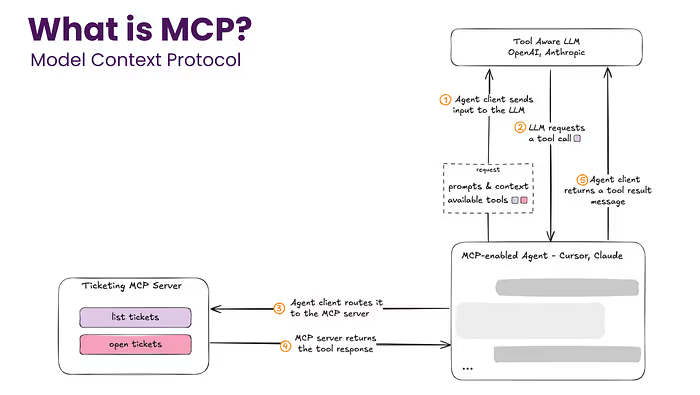

The flow is simple but powerful:

- Request from the user: You type a question or command in an MCP-enabled client such as Cursor or Claude Code.

- Sent to the model: The client sends your request to the LLM provider (e.g. Anthropic, OpenAI) along with a list of available MCP servers and the tools they expose.

- Tool selection: The model decides which tool is relevant and issues a tool call.

- Server response: The client forwards that call to the MCP server, which queries the system it represents (e.g. dbt, Snowflake, Elementary, Jira).

- Result returned: The response is passed back through the client to the model, which can then use it to answer your question or continue the workflow.

Example:

- You ask in your IDE: “Which Jira tickets are assigned to me?”

- The request goes to the model with the list of connected servers.

- The model selects the Jira MCP server and calls the

list_ticketstool. - Jira returns the tickets, which are then passed back into the model.

- The IDE shows you the result in context.

This same flow works with data tools: asking about lineage, incidents, or pipeline performance using the Elementary MCP server; asking about tasks might use GitHub or Jira; querying a dataset could hit Snowflake or BigQuery.

By standardizing this flow, MCP removes the need for custom connectors. Any client can talk to any server, which is why adoption is growing quickly across IDEs, data tools, and productivity platforms.

How MCP helps with data reliability

1. Preventing breaking changes

The problem

Data teams often rely on production systems such as Postgres or MySQL, which software engineers typically maintain. The challenge is that those engineers may not know how the data is consumed downstream. Since they are not responsible for the dependencies in the data stack, a schema change in a production database can unintentionally break pipelines, models, or dashboards for the data team.

With MCP

- A software engineer makes a schema change in an operational database, like Postgres.

- Their IDE is connected to the Elementary MCP server.

- Before merging, the IDE queries the column‑level lineage tool and data contracts tool.

- It surfaces all data assets and dashboards that would be affected downstream.

This prevents silent breakages that data teams usually discover days later.

Why it matters

Breaking schema changes are one of the most common causes of failed pipelines. MCP makes lineage and data contracts checks a built‑in step in development, not a reactive scramble afterward.

2. Column refactoring

The problem

Column or table refactors can cascade into hundreds of downstream changes. A simple rename can break downstream data assets, tests, and BI dashboards. Many teams have reported spending weeks on these manual changes.

With MCP

- The IDE queries column‑level lineage and column, table, and BI‑asset usage stats from the MCP server.

- It generates the required edits in SQL, YAML, and tests.

- It lists impacted BI assets for additional review.

Why it matters

Refactors are necessary for maintaining pipelines, but they’re often delayed because of risk. By automating the heavy lifting, MCP reduces weeks of work to an afternoon project. This isn’t just convenience; it enables data teams to keep up with the business dynamics.

3. Optimizing cost and performance

The problem

Pipelines breach SLAs when certain tasks take too long. Identifying the true bottlenecks requires digging through logs and execution histories, often across multiple systems.

With MCP

- The IDE asks the MCP server for execution times, results, lineage, usage stats, and data warehouse metadata.

- It identifies the critical data elements and tasks for optimization.

- It proposes SQL and data warehouse optimizations, and it flags unused data assets for removal.

Why it matters

Pipeline performance isn’t just about speed and SLA, which of course are highly critical. It’s also about cost. Long runtimes drive up warehouse costs.

Multiple teams we’ve worked with successfully cut hours from their daily runtimes simply by dropping unused data assets flagged by MCP context. That also meant thousands of dollars in saved compute costs.

4. Faster incident resolution

The problem

Incidents are painful to investigate. When an alert fires, you need to check lineage graphs, query execution logs, and commit history. The context is scattered, and Mean Time to Resolution (MTTR) often stretches into hours.

With MCP

- You paste the alert into your IDE.

- MCP pulls incident details, related alerts, lineage, and recent changes.

- The IDE traces the root cause upstream and even suggests a fix.

Why it matters

MTTR is a critical reliability metric. Lower MTTR means fewer data outages and happier stakeholders. MCP makes investigation a single-step workflow instead of a scavenger hunt across systems.

As an example, an anomaly in a marketing model was traced back to inconsistent unit conversions (cents vs. dollars). Using the MCP’s context, the IDE surfaced the mismatch and proposed a fix in minutes.

5. Closing coverage gaps

The problem

Generated tests often miss the real risks because they rely only on static code. They don’t know about how data is actually used and consumed in production or which assets are critical.

With MCP

- Test recommendations are informed by incident history, usage stats**,** and patterns and downstream dependencies.

- The IDE is now aware of how the data is sliced and diced by consumers.

- The IDE can automatically add tests and Elementary’s anomaly monitors grouped by the dimensions that are acutely used by consumers.

Why it matters

Coverage is much more useful if it matches how data is consumed. For example, a key business metric may look fine in aggregate but fail for a specific dimension like a region or country. MCP ensures that tests align with real-world consumption and breakdowns, not just high‑level monitors that catch only global issues.

6. Trustworthy BI and AI agents

The problem

AI analytics agents and BI bots can query data, but they lack context about freshness, quality, or governance. It seems promising as data consumers can ask a question and get an answer immediately, but the quality and trust fall short. More often than not, results are simply unreliable.

With MCP

- Before answering, the AI agent queries the MCP for freshness, quality scores, incidents, documentation**,** and usage.

- It only returns results from approved, up-to-date sources.

- If the data isn’t trustworthy, the agent can say so.

Why it matters

Trust is the bottleneck to adoption. MCP brings transparency into BI and AI workflows by embedding data reliability checks before results are shown.

How MCP is different from past approaches

In the past, data teams used SDKs, APIs, and custom plugins to connect systems. These worked, but each integration had to be built and maintained separately. Each tool needed its own connector, which doesn’t scale.

MCP takes a different approach. It’s a protocol, not a plugin. The specification is open, so once a server is implemented, any MCP-compatible client can use it. That means tools can expose their systems once, and any IDE or agent that speaks MCP can consume it.

The benefit is interoperability without glue code. You can connect Cursor to Jira, GitHub, Snowflake, dbt, or Elementary in the same workflow, and the model will know how to route requests.

Adoption is already underway in the data ecosystem:

- Snowflake provides MCP servers for Cortex Agents, enabling structured access to data through AI tools.

- BigQuery supports MCP through the MCP Toolbox.

- dbt has released an MCP server that exposes lineage, metadata, and metrics.

- Cursor, Claude Code, and ChatGPT Developer Mode are MCP clients, bringing this context directly into IDEs and conversational agents.

A step toward self-healing data pipelines

Reliability in data isn’t just about detecting errors. It’s about reducing the manual effort to prevent and resolve them. MCP is an inflection point because it makes context portable. It moves reliability from dashboards on the side into the daily tools of engineers and analysts.

At Elementary, we’ve built an MCP server for data reliability, but the broader point is this: MCP enables workflows that were previously impossible. Preventing breaking changes, accelerating refactors, reducing MTTR, and building trust in analytics agents are just the beginning.

With MCP, context moves into the workflow itself. That shift takes self-healing pipelines and reliable AI and BI agents from aspirational to practical.