As data practitioners, we all know how powerful testing can be. However, many of us struggle with when to use different kinds of tests. Assertion and anomaly tests are great examples of this, as they each serve different purposes in ensuring high-quality data in dbt projects.

Anomaly detection tests are found less often in dbt projects due to the lack of understanding. However, they hold so much power in detecting trends in your data and can fill a gap that assertion tests cannot.

In this article, we will discuss:

- The difference between assertion and anomaly detection tests

- When to use each type of test

- The elements of an anomaly test

- Elementary anomaly test examples

- Best practices for setting them up in Elementary.

By the end of this comprehensive guide, you will clearly understand the value of anomaly tests and feel confident setting them up in Elementary.

You can also watch the Anomaly Detection Tests webinar which covers everything in this guide.

Anomaly Detection Tests - Elementary OSS vs. Elementary Cloud

Before we dive into this guide, it's important to understand that both Elementary OSS and the Elementary Cloud Platform offer anomaly detection tests. However, there are significant differences in implementation.

There are two types of anomaly detection tests:

- Pipeline health monitors - Monitor the pipeline runs, ensuring timely and complete data ingestion and transformation. These monitors monitor metadata to detect volume and freshness issues.

- Data quality metrics tests - Run as part of the pipeline, collect metrics by querying the data itself. These include various data quality metrics such as nullness, cardinality, average, length, etc.

You can find a detailed comparison between the implementation of these tests in Elementary Cloud and OSS in our docs.

The guide below focuses solely on data quality metrics tests.

Data tests: assertions vs. anomaly detection

When we think of data validation tests, there are two main types of tests- assertion tests and anomaly detection tests.

Assertion tests

Assertion tests validate a true or false explicit expectation about your data. As a dbt user, these are tests that you are most likely already familiar with. They include dbt generic tests such as unique, not_null, accepted_values, and relationships tests. Assertion tests are also the main tests included in popular testing packages like dbt_utils and dbt_expectations.

Assertion tests are great for enforcing expectations about what you already know to be true about your data. However, they aren’t a fit for many cases where data is dynamic and your expectations are not static.

For example, let’s say you want to add a test to assert the expectation of a certain row count for a growing data table. You could use the expect_table_row_count_to_be_between test from dbt expectations and set a static min_value and max_value.

models:

- name: growing_volume_table

tests:

- dbt_expectations.expect_table_row_count_to_be_between:

min_value: 1

max_value: 450000

However, because the data table is constantly growing, you will most likely have failures that occur in your pipeline, requiring you to update the static values. This is a perfect example of when you’d want to use an anomaly test that scales as the data grows.

Anomaly detection

Anomaly detection tests validate new data in a table by comparing it to old data. With these types of tests, you don’t need to know what to expect exactly but can set the tests up in a way that adjusts to the trends in your data. A great example of these are anomaly detection tests available through Elementary.

Anomaly detection tests are good at detecting outliers in the data, but not explicit failures. This is why they are the perfect type of test to complement assertion tests already in your dbt project.

Keep in mind that assertion tests need to be configured well to work for your dataset and use case. Later in this article, we will discuss the different parameters needed to configure and customize these tests in Elementary.

When to use assertions vs. anomaly detection

Now that you understand the difference between assertion and anomaly tests, let’s discuss when to use each one in your dbt project.

As a rule of thumb, apply an anomaly detection test when you want to know if the data is consistent or if there are any trends.

Let’s pretend we are analytics engineers for an e-commerce shoe company. In one of our models we have a derived metric called purchase_sumthat helps stakeholders make marketing and product decisions on our website. Because this metric is used heavily by decision-makers, we want to thoroughly test it to ensure high-quality data.

We start by adding a not_null test to the field. However, this doesn’t ensure confidence in finding issues in this derived column. It doesn’t help us if purchase_sum passes this test but is negative or obscurely high. The test wouldn’t catch the deviations from the norm that we would want to be notified of.

models:

- name: order_sizes

columns:

- name: purchase_sum

tests:

- not_null

Next, we add a test from dbt expectations, expect_column_values_to_be_between, that requires a minimum and maximum value to be set on the field. We are confident that this field should always be over 0, so we can set that as the minimum value. However, we aren’t sure what the maximum value should be due to different factors like price changes, new items, inflation, and sales periods.

models:

- name: order_sizes

columns:

- name: purchase_sum

tests:

- dbt_expectations.expect_column_values_to_be_between:

min_value: 0

max_value: 63

While this test is more helpful than the last, it will most likely fail in situations that deviate from the normal purchase sum amount. For example, you will most likely need to adjust these values when there are large sales on the website or new product releases.

Luckily, there is a solution that takes all of these considerations into account. Instead of using dbt_expectations, Elementary’s column_anomalies assertion test could be applied in this scenario. Using this test we would need to specify a timestamp field called purchase_time and max, average, and sum as the column anomalies.

models:

- name: order_sizes

columns:

- name: purchase_sum

tests:

- elementary.column_anomalies:

timestamp_column: purchased_at

column_anomalies:

- min

- max

- average

This configuration will then help us detect trends in the change of purchase_sum over time. Now, rather than needing to adjust hard-coded values in our tests, the detection values will adjust with the trends of our data.

We will walk through how to configure the parameters in the anomaly detection tests later in the guide.

Elementary anomaly detection examples

What type of anomaly detection tests are available in Elementary? Here are a few use cases of when you’d want to use anomaly detection tests and the specific Elementary tests you’d apply.

Detecting spike/drop in row count or data delay

In this case, you would want to use freshness_anomalies and volume_anomalies.

Freshness anomalies monitor the freshness of your table over time, as the data changes. This ensures new data is always being added to your table at the rate you expect.

Volume anomalies monitor the row count of your table over time by time bucket. By applying this test you can keep track of any drops or spikes in the amount of data being ingested.

models:

- name: order_sizes

tests:

- elementary.freshness_anomalies:

timestamp_column: purchased_at

- elementary.volume_anomalies:

timestamp_column: purchased_at

Detecting issues in the quality of column fields like spikes in nulls, string length, or a daily average of numbers

When dealing with the values in your columns, it is best to use column_anomalies and all_column_anomalies.

Column anomalies monitor specific columns and allow you to detect anomalies on key metrics such as null_count, missing_percent, and average. This is a great one to use if you have a small subset of columns you wish to test.

All column anomalies work similarly but instead, detect anomalies on all of the columns in a table.

models:

- name: order_sizes

tests:

- elementary.all_column_anomalies:

timestamp_column: purchased_at

column_anomalies:

- null_count

- missing_percent

- average

Detecting anomalies in the distribution of values within a column

To test the frequency of values in a dimension, you need to use the dimension_anomalies. test. This test counts rows by the dimension specified, alerting you of any changes in the frequency of a value. Popular dimensions in this case include device_type, campaign, and browser.

models:

- name: order_sizes

tests:

- elementary.dimension_anomalies:

timestamp_column: purchased_at

dimensions:

- device_type

- browser

- campaign

How anomaly detection tests in Elementary work

Anomaly detection tests are available out-of-the-box when you download the Elementary dbt package in your dbt project. Because Elementary is a dbt package, you can utilize your existing dbt workflow and have your tests live alongside any data updates.

3 Elements of anomaly detection

3 things are needed with every anomaly detection test: a training set, a detection set, and an algorithm.

.avif)

Training set

Believe it or not, training and detection sets are the most important parts of any anomaly detection test. To detect true anomalies you need to ensure you have a large enough training set.

You can think of your training set as data points collected on the columns you decide to test. To test the column you need 2 parameters: timestamp_column and time_bucket.

timestamp_column is the parameter used to slice the data. It is always a timestamp field representing when your data was added to the table.

time_bucket is the parameter that determines the frequency at which to slice the data. This should always be equal to the frequency that your data updates.

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: day

count: 1

You also need to specify a training_period (although this is optional in Elementary as there is a default). The training period determines the length of the look-back window, telling Elementary what the norms of the data are. Keep in mind that this look-back window is always moving and the smaller the look-back window, the more sensitive your data will be to anomaly detection.

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: day

count: 1

training_period:

period: week

count: 3

Here we added purchased_at as the timestamp_column, as this represents when an order was created. With this configuration, the data is sliced every day to find the typical volume of data being ingested into the table. Once the test collects three weeks’ worth of data points, it can begin detecting anomalies.

Detection set

The detection set specifies the look-back window for Elementary to detect any anomalies as compared to the training set. This is specified in Elementary using the detection_period parameter.

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: day

count: 1

training_period:

period: week

count: 3

detection_period:

period: month

count: 1

Similar to the training period, you can choose to configure this parameter yourself or use the default of 2 days. Here, we will continually look back one month to see if any anomalies have occurred in the last month compared to the training period.

Algorithm

To detect anomalies, Elementary uses the z-score, or the number of standard deviations an individual data point differs from the mean of a dataset. The expected (or default) range is within 3 standard deviations and everything outside of this is considered a spike or drop anomaly, depending on the direction of the z-score. How Elementary uses this can then be configured with different parameters to fit your use cases.

The sensitivity to the z-score can be adjusted using a parameter called anomaly_sensitivity. For example, setting this to detect anomalies outside of 2 standard deviations from the mean would look like this:

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: day

count: 1

training_period:

period: week

count: 3

detection_period:

period: month

count: 1

anomaly_sensitivity: 2

You can also configure the direction of the anomaly in case you only wish to be alerted for spikes or drops, rather than both. If we want to only be alerted of drops, for example, we would configure a parameter called anomaly_direction like so:

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: day

count: 1

training_period:

period: week

count: 3

detection_period:

period: month

count: 1

anomaly_sensitivity: 2

anomaly_direction: drop

In the case of seasonal changes, a parameter called seasonality allows you to specify that you want your data slices to be compared to other data slices of that same season. This will prevent Sundays from being compared to Wednesdays for example.

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: day

count: 1

training_period:

period: week

count: 3

detection_period:

period: month

count: 1

anomaly_sensitivity: 2

anomaly_direction: drop

seasonality: day_of_week

Now, using day_of_week, we can ensure Sundays are compared to Sundays of the previous week, Mondays are compared to Mondays of the previous week, etc.

How metrics are stored and generated

When an anomaly detection test is run, Elementary then creates tables that store the metric calculations within your dbt data warehouse. This helps to keep track of how metrics have changed over time, powering the ability to detect trends within the data.

Two tables are created:

data_monitoring_metrics is an incremental model that stores historical records of the metrics generated by Elementary tests. This table is essential in anomaly detection trends as it queries the historical metrics and compares it to the current ones.

metrics_anomaly_score is a view that sits on top of data_monitoring_metrics, running the same query that is used to calculate anomaly detection scores within Elementary. This is helpful for seeing into the results of your anomaly detection tests.

A few key fields that it generates:

training_start- This looks at the time bucket that’s created from the parameters specified and chooses the first timestamp value from the inputtedtimestamp_column.- This field’s values depend on the

training_periodset in your test. When you first create your test, this will be the first day your test is active until the test has been running the duration of yourtraining_period. Eventually, this field’s value will change so that the time between thetraining_startandtraining_endmatches thetraining_period. training_end- This looks at the time bucket that’s created from the parameters specified and chooses the last timestamp value from the inputtedtimestamp_column.- This field’s value will always be the most recent time bucket. For example, if your

time_buckethas aperiodof one day, this will be the current or last day depending on when the test is run. training_set_sizecounts the number of times your specified metric was calculated, or the number of rows available with metric calculation data.- This value will be equivalent to your current

training_perioddivided by thetime_bucket. For example, if yourtraining_periodis one week and yourtime_bucketis one day, then yourtraining_set_sizewould be seven. - Note that this will be less when first creating your test as the entire

training_periodhas not run its course. training_avgis the average metric value across the number of records specified bytraining_set_size.- This utilizes the value of your metric in each bucket to then calculate the average. This is a key component in the formula for calculating the anomaly detection score!

These tables can be helpful to explore to understand more about the trends you are seeing in your data. If you aren’t sure why a metric is being calculated the way it is, this is the first place I would look.

Best practices for configuring your first test

When setting up your first anomaly detection test in Elementary, there are a few steps we recommend to ensure your tests work as expected. Following these will give you the confidence you need to closely monitor your data quality.

#1: Start with timestamp_column and time_bucket

This is the most important step for accurate detection and getting immediate value from anomaly detection tests. For the timestamp_column, you want to choose the field that represents when the data was created or inserted into your table.

The period and count values in the time_bucket parameter should match the frequency that your data updates. For example, if your data updates every hour, then you would set this to be 1 hour.

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: hour

count: 1

Here we added purchased_at as the timestamp_column, as this represents when an order was created. With this configuration, the data is sliced every day to find the typical volume of data being ingested into the table.

Now your first anomaly test is officially set up! Because the other parameters have default values, you can begin detecting anomalies without configuring anything else.

#2: Set the test severity to warn and configure a tag to run your test

Tests built into dbt and in the Elementary package can be configured with test severity. This is great to use when first building out a test, or when the test is not a breaking error. Setting it to warn will prevent downstream data sources from failing to run when the test fails.

With doing this, you can also configure a tag in dbt that you can then use to run your test. These two steps will allow you to iron out any kinks in your tests before the tests are integrated into your production pipeline.

models:

- name: order_sizes

tests:

- elementary.volume_anomalies:

timestamp_column: purchased_at

time_bucket:

period: hour

count: 1

config:

severity: warn

tags: ['elementary']

With a tag called elementary defined, you can then run the test like so:

dbt test --select tag:elementary

This will only run the Elementary test defined with this tag rather than all of your dbt tests.

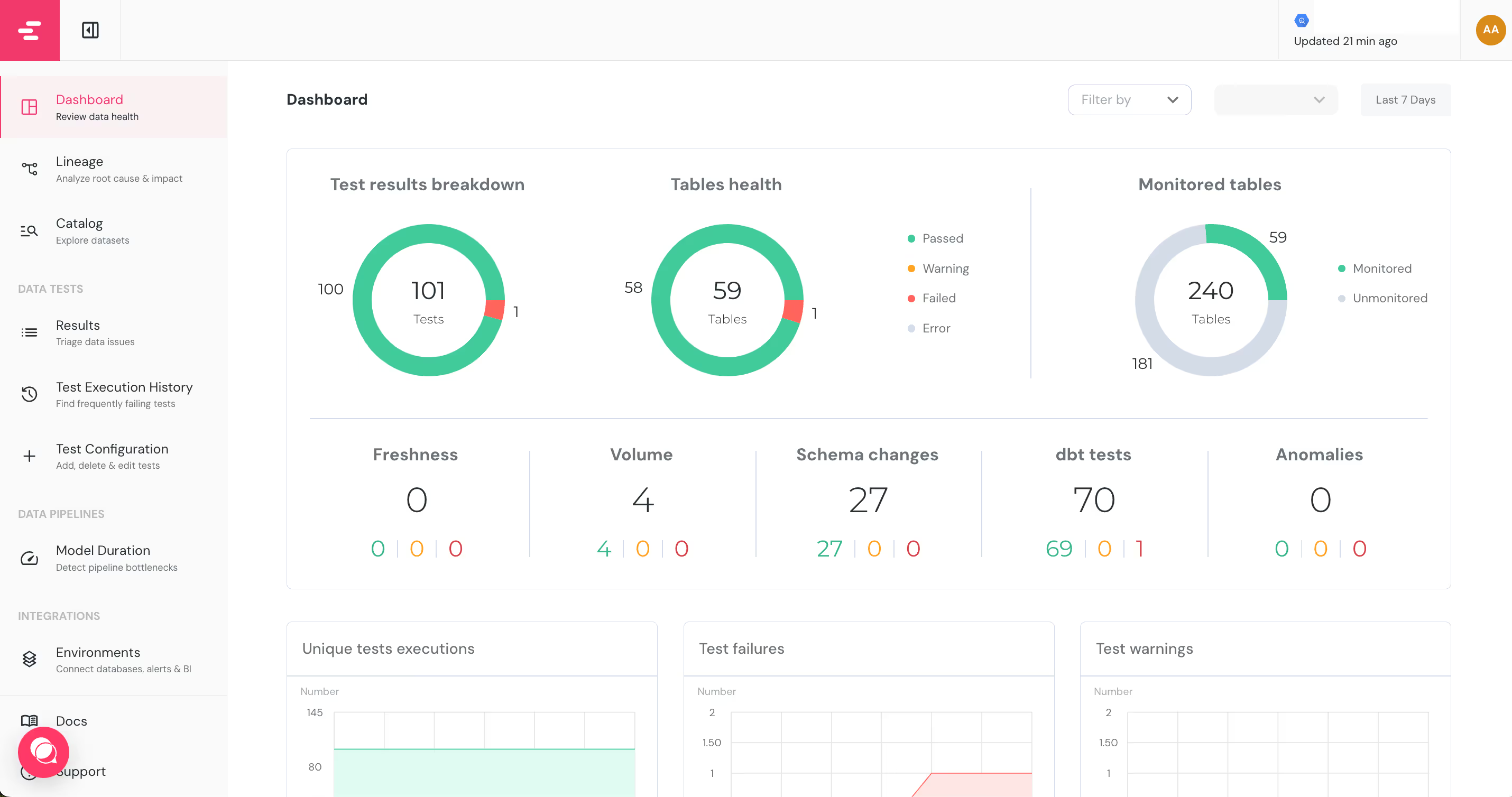

#3: Use Elementary UI to visualize the results

.avif)

After running the test with the tag you configured in the previous step, check out Elementary’s UI to see if the test is working as expected.

To do this, first install the Elementary CLI.

pip install elementary-data

You will also need to install the adapter specific to the data platform you are running Elementary with:

pip install 'elementary-data[snowflake]'

pip install 'elementary-data[bigquery]'

pip install 'elementary-data[redshift]'

pip install 'elementary-data[databricks]'

pip install 'elementary-data[athena]'

pip install 'elementary-data[trino]'

## Postgres doesn't require this step

Next, run the following command:

edr report

This will use the connection profile you have set up to access your data warehouse, read from Elementary’s generated tables, and generate an HTML file that you can then open in a web browser.

This will use the connection profile you have set up to access your data warehouse, read from Elementary’s generated tables, and generate an HTML file that you can then open in a web browser.

When looking at the visualizations of your test results, don’t forget to ask the following questions:

- Do the time buckets make sense?

- Are there clear seasonality patterns

- Is the expected range too wide or narrow?

These will all allow you to think about your data strategically and adjust the parameters as needed. We recommend continuously changing the parameters and running the test until you get it just right.

#4: Run the test for a few days to increase confidence

Once you’ve reiterated the test and have a result you are happy with, you’ll want to run the test for a few days with the warn severity. This will ensure the test works smoothly before you change the severity and it has the power to fail your data pipeline.

#5: Change severity to error

Once you are confident in the test’s configuration, you can remove the warn severity. The default of any test is to error out, so you don’t need to specify this. By having this as the default, your tests will fail and prevent downstream dependencies from running.

#6 Continue to build out tests

Now that you’ve successfully built out your first anomaly detection test in Elementary, you can continue to build more! We recommend following these steps for every new test you add to your dbt project until you get a grasp of how Elementary works.

Don’t be afraid to test out different anomalies like freshness, column, and dimension anomalies.

Using the Elementary Cloud Platform

Elementary also offers a full Observability platform. With Elementary Cloud, you do not need to worry about generating the visualizations locally but instead can see them dynamically on your browser.

Elementary Cloud offers features such as automated anomaly detection, data health scores, column-level lineage, incident management, and a data catalog.

If you have multiple people on your data team monitoring tests and overall data quality, it may be worth thinking about Elementary Cloud.

In Cloud, you will see reports on your tests and the overall health of your data environment in an easy-to-navigate platform.

Conclusion

Anomaly detection tests complement other tests, both generic and from other packages, that are already built out in your dbt project. Unlike these other tests, they add the ability to check for trends in your data, creating a comprehensive testing environment.

Applying anomaly detection tests requires an understanding of the three main components- a training set, a detection set, and an algorithm. Elementary makes it easy to customize these three things, offering a wide range of parameters on its various types of anomaly detection tests.

By tweaking these parameters, you can perfectly visualize how your data observability will improve from these tests. Elementary’s anomaly detection tests give you the confidence you need to ensure data loss, spikes in null values, and even changes in distribution won’t go undetected.

.svg)