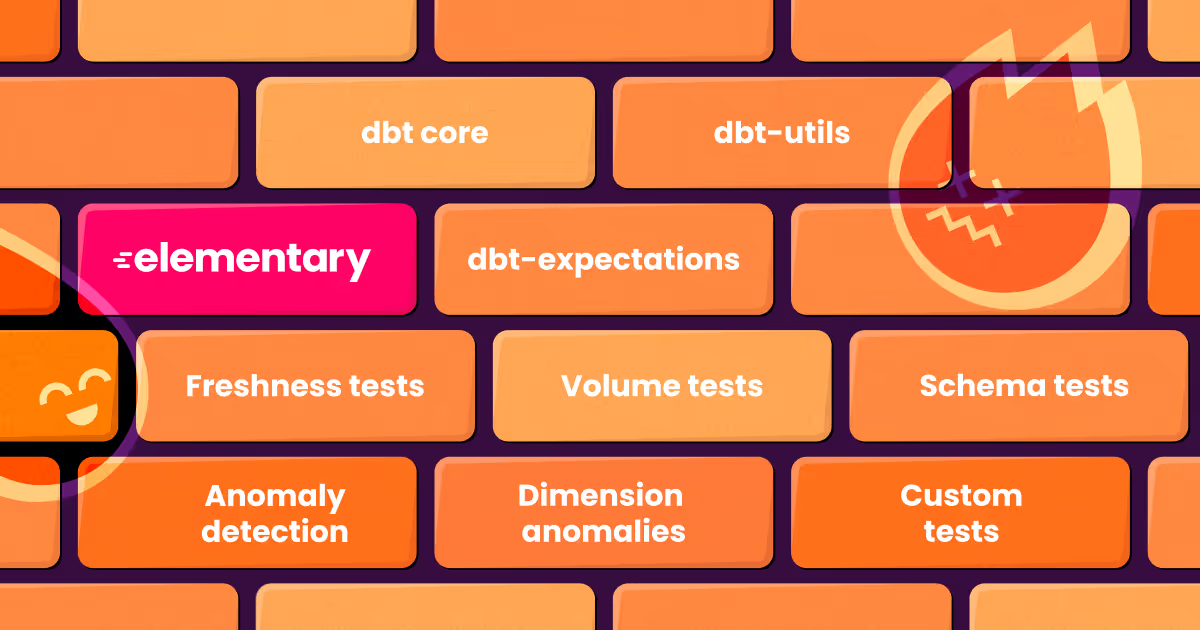

One of the greatest advantages organizations get from adopting dbt is its built-in testing functionality. With a simple yml config, you can test your data with a single command. Need advanced testing? Write custom tests for your use case, or leverage packages to extend your testing suite.

Sounds simple.

However, building a reliable testing suite is not.

dbt tests - The more the merrier?

In many of the conversations we had with dbt users, we heard about two types of testing gaps:

- We have almost no tests

- We have too many tests

The first has a clear solution, as cumbersome as it might be. But the second is a gap just as big. Teams spend time and effort adding tests, and end up with a Slack channel that one user described to us as an “alerts graveyard”.

If there are more alerts than you can handle, you get used to ignoring them, and testing becomes worthless. In this post, Lawrence Jones of incident.io describes the actual price you pay - your real problems are lost in the noise.

![A two-panel meme featuring Squidward from "SpongeBob SquarePants." In the first panel, Squidward looks sad while kneeling beside a gravestone. The second panel shows a close-up of the gravestone, which displays multiple lines of "[FAIL]" in red text](https://cdn.prod.website-files.com/6600900f6b80d2b70b387dc4/66009112b967491a9d130491_64be8159948e00fd09fe864f_634e6f4cb5986e437b813078_alerts_graveyard.avif)

How to avoid the dbt tests “alerts graveyard”?

Whether you already experience alert fatigue in your dbt testing, or you simply want to actively prevent this situation, the action you should take is to monitor your test results over time.

This data should enable you to identify:

- Which tests tend to fail - There are probably a few flaky tests that cause most of the noise.

- Which tests are redundant - These are tests that practically test the same thing, and will always fail together.
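As a rough illustration of both checks, here is a minimal Python sketch. It assumes you have already collected one dictionary per dbt invocation that maps a test name to its status string (for example, extracted from the `run_results.json` artifact of each run). The test names, the sample history, and the helper functions `failure_rates` and `always_fail_together` are all hypothetical, not part of dbt.

```python
from collections import Counter
from itertools import combinations

# Statuses treated as a failure in this sketch (an assumption; adjust as needed).
FAILING = {"fail", "error"}


def failure_rates(runs):
    """Return {test_name: share of its executions that failed}."""
    executed = Counter()
    failed = Counter()
    for run in runs:
        for test, status in run.items():
            executed[test] += 1
            if status in FAILING:
                failed[test] += 1
    return {test: failed[test] / executed[test] for test in executed}


def always_fail_together(runs, min_failures=2):
    """Return pairs of tests that failed in exactly the same set of runs."""
    failing_runs = {}
    for index, run in enumerate(runs):
        for test, status in run.items():
            if status in FAILING:
                failing_runs.setdefault(test, set()).add(index)
    return [
        (a, b)
        for a, b in combinations(sorted(failing_runs), 2)
        if failing_runs[a] == failing_runs[b]
        and len(failing_runs[a]) >= min_failures
    ]


# Hypothetical history: one dict per run, test name -> status.
runs = [
    {"not_null_orders_id": "pass", "unique_orders_id": "fail", "orders_pk": "fail", "fresh_events": "fail"},
    {"not_null_orders_id": "pass", "unique_orders_id": "pass", "orders_pk": "pass", "fresh_events": "fail"},
    {"not_null_orders_id": "pass", "unique_orders_id": "fail", "orders_pk": "fail", "fresh_events": "pass"},
    {"not_null_orders_id": "pass", "unique_orders_id": "pass", "orders_pk": "pass", "fresh_events": "fail"},
]

rates = failure_rates(runs)
noisiest = sorted(rates, key=rates.get, reverse=True)
redundant_pairs = always_fail_together(runs)
```

In this made-up history, `fresh_events` fails most often and is the first candidate for a threshold or severity change, while `unique_orders_id` and `orders_pk` fail in exactly the same runs, so one of them may be redundant. A pair that fails together is only a hint; it is still worth checking that the two tests really assert the same thing before removing either.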

After you detect these noise factors, you can take actions such as changing test severity, adjusting thresholds (check out error_if), adding conditions, or even removing tests. This can be a bit intimidating, as we think of tests as our safety guards. But it is actually the safe thing to do - alerts that are ignored anyway distract from the real issues. They actually harm your safety.

Increase trust in your tests

Another common effect of noisy and abundant tests is a lack of clarity about what is actually tested and where your blind spots are. The same data that helps detect noisy tests can be used to create visibility into test executions and coverage.
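One way to get that visibility is to compare the tests defined in the project with the tests that actually ran. The sketch below assumes a dictionary shaped loosely like dbt's `manifest.json` (a `nodes` mapping whose entries carry `resource_type` and `depends_on.nodes`) plus a set of test IDs seen in recent runs. The node IDs and the `coverage_gaps` helper are hypothetical, and a real manifest contains many more fields.

```python
def coverage_gaps(manifest, executed_test_ids):
    """Return (models with no tests, tests that never executed)."""
    nodes = manifest["nodes"]
    models = {uid for uid, node in nodes.items() if node["resource_type"] == "model"}
    tests = {uid: node for uid, node in nodes.items() if node["resource_type"] == "test"}

    # A model counts as covered if at least one test depends on it.
    covered = set()
    for node in tests.values():
        covered.update(dep for dep in node["depends_on"]["nodes"] if dep in models)

    untested_models = sorted(models - covered)
    tests_not_run = sorted(set(tests) - set(executed_test_ids))
    return untested_models, tests_not_run


# Hypothetical, heavily trimmed manifest-like structure.
manifest = {
    "nodes": {
        "model.shop.orders": {"resource_type": "model", "depends_on": {"nodes": []}},
        "model.shop.customers": {"resource_type": "model", "depends_on": {"nodes": []}},
        "test.shop.unique_orders_id": {
            "resource_type": "test",
            "depends_on": {"nodes": ["model.shop.orders"]},
        },
        "test.shop.not_null_orders_id": {
            "resource_type": "test",
            "depends_on": {"nodes": ["model.shop.orders"]},
        },
    }
}

# Hypothetical set of test IDs observed in recent runs.
executed = {"test.shop.unique_orders_id"}

untested_models, tests_not_run = coverage_gaps(manifest, executed)
```

Here `model.shop.customers` has no tests at all, and `test.shop.not_null_orders_id` is defined but never ran. Both lists are starting points for a conversation about ownership rather than an automatic to-do list.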

As we want to avoid the “graveyard” scenario, don’t jump into adding tests. For each model that lacks coverage, ask yourself: who would act if it breaks? Who would act if its tests don’t actually run? If you don’t have a clear answer, adding tests will result in noise that will be ignored.

Continuous improvement

Reducing noise and blind spots will have a major impact on your ability to trust your tests. This is not a one-time project; it should become part of your dbt project development process. Just like you improve your data modeling over time, invest in improving your testing. It gets easier with time, and it pays off.

We hope you find the Test Runs screen valuable in tackling the challenges mentioned in the post and would love your feedback about it!
