Imagine stepping into a modern office building equipped with cutting-edge fire safety measures—fire alarms, motion sensors, and automatic sprinklers. At first glance, it seems like the perfect system: every possible risk accounted for, no chance of disaster slipping through the cracks.

But there’s a flaw.

Every time someone makes toast, the alarms go off. A single birthday candle triggers the sprinklers. Security personnel are constantly called to check for non-existent fires. Eventually, people stop paying attention. They start silencing the alarms, ignoring the disruptions, and worse—missing real fire hazards when they actually happen.

Now, picture the opposite scenario—a building with no fire alarms at all. A fire could be smoldering undetected for hours before anyone notices. By the time someone smells smoke, it’s too late.

This is exactly what happens when data quality testing and monitoring swings too far in either direction.

The Danger of Under-Testing: Fires You Can’t See

A lack of data quality checks is like having no fire alarms—critical problems go unnoticed until they’ve already caused significant damage. Corrupt or incomplete datasets silently make their way through pipelines, leading to misleading reports, flawed business decisions, and potential financial or reputation harm before anyone realizes something is wrong.

dbt tests are great at catching known issues, such as missing values (not_null) or duplicate keys (unique). But they don’t catch the unknown—unexpected schema changes, anomalies in business metrics, or a sudden surge in missing data. Without proactive anomaly detection, these problems can spread quietly, just like an unnoticed fire burning behind a wall.

Signs You’re Under-Testing

- Relying only on basic dbt tests (

not_null,unique) and assuming they cover all potential problems. - No proactive anomaly detection to identify unknown data issues before they escalate.

- Finding out about data issues only after reports are broken or stakeholders complain.

Without adequate testing, a small data issue can spiral into a much larger crisis—just like an unseen fire spreading through a building.

The Risk of Over-Testing: False Alarms and Wasted Effort

It might seem logical that a higher test coverage equal better reliability, but an over-tested system often has the opposite effect. Too many unnecessary checks create excessive noise, increase maintenance overhead, and provide a false sense of security. Worse, overwhelmed engineers may resort to bypassing or disabling tests just to keep things running—undermining the entire data quality process.

Signs You’re Over-Testing

- Testing redundancy creates noise, not insight: A blanket approach to testing leads to cluttered dashboards filled with low-priority alerts, making it harder to identify critical issues.

- False confidence in test coverage: A bloated test suite can create the illusion that everything is under control, even when key risks are being missed. Over-relying on predefined rules without anomaly detection can leave significant gaps—just like a fire alarm that only detects smoke but misses a gas leak.

- Frequent false positives: When engineers get flooded with non-critical alerts, they start ignoring all alerts—including the important ones.

- Tests blocking legitimate changes: Hardcoded constraints (e.g.,

accepted_values) can break pipelines unnecessarily when new but valid data appears. - Engineers creating workarounds: To cut through the noise, engineers may start bypassing or disabling tests instead of addressing real data quality issues, eroding trust in the testing process.

If every alarm sounds the same—whether it’s a minor glitch or a major issue—eventually, people tune them all out. The same happens with excessive data testing: real problems get buried in the noise.

Finding the Sweet Spot: A Fire Alarm System That Works

Effective data coverage isn’t about testing more—it’s about testing smarter. Just like a well-designed fire alarm system balances sensitivity with reliability, data teams need a structured approach that catches critical issues without overwhelming engineers.

How to Apply This to Data Quality

To create a balanced and effective data testing strategy, consider these best practices:

- Prioritize What Matters: Not all datasets require the same level of scrutiny. Focus on business-critical assets, ensuring high-value data is well-monitored while avoiding unnecessary tests on lower-priority datasets.

- Minimize False Positives: Use anomaly detection and dynamic thresholds instead of static, rule-based checks to reduce alert fatigue and make failures more meaningful.

- Tiered Testing: Apply lightweight checks to less critical data while implementing deeper validation for high-risk pipelines.

- Ensure Clear Ownership: Effective data governance means assigning clear ownership over every test. Each test should have someone responsible for triaging failures, maintaining relevance, and ensuring resolution—preventing outdated or forgotten checks.

- Regularly Audit Your Test Suite: Remove redundant, outdated, or low-value tests to maintain an efficient, actionable testing strategy.

The Bottom Line: Smarter, Not Louder

A building without fire alarms is dangerous—but a building where alarms never stop going off is just as bad. The same applies to data testing.

Instead of blindly increasing test coverage, focus on risk-based, high-impact testing that ensures data quality without excessive overhead. By striking the right balance between focused test coverage and strong governance, data teams can improve trust in their testing process—making sure that when an alert does trigger, it actually matters.

By following this approach, your data testing remains effective and efficient, keeping your pipelines reliable without drowning in noise.

Looking Ahead: Smarter Testing, Powered by AI

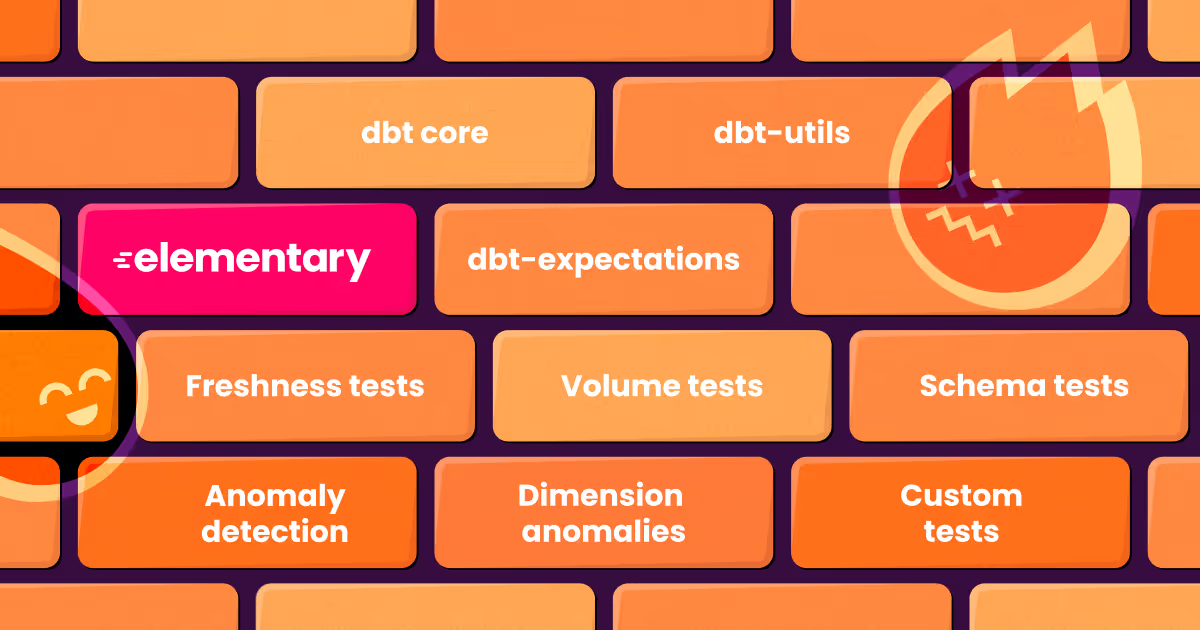

To help teams strike the right balance even more effectively, Elementary built an AI-powered tests coverage engine.

It analyzes your existing tests results, metadata and usage patterns to:

- Suggest where to add test coverage—focusing on areas that may be under-tested and pose real risk.

- Identify redundant or low-value tests—so you can clean up noise and remove tests that no one reacts to.

- Recommend configuration optimizations—like adjusting thresholds to reduce false positives or fine-tuning sensitivity based on real-world outcomes.

It’s all about continuously refining your data quality strategy—ensuring your testing is precise, efficient, and actually meaningful.

If you’re interested and want to learn more about how this feature can help your team, feel free to book a call with us. We’d love to chat!

.avif)