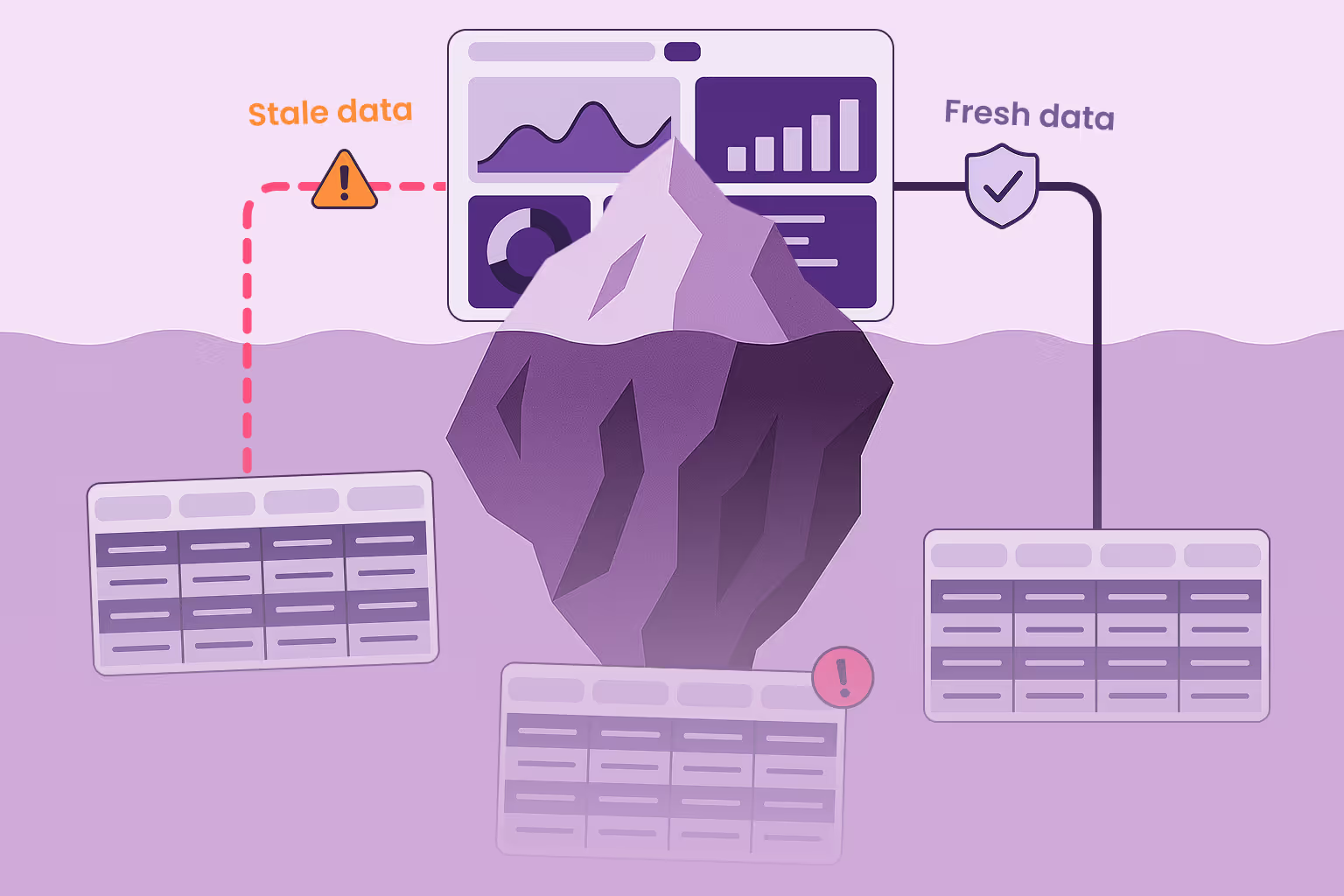

Most data teams understand the basics of data freshness, check the timestamp, confirm recent updates, and monitor pipeline completion. However, experienced data teams know that freshness goes far beyond these surface-level validations.

Basic freshness checks can identify obvious staleness, but they often overlook complex issues like partial freshness degradation, where some data partitions update while others silently fail. Or temporal inconsistency traps arise when downstream consumers work on mixed-freshness datasets, creating analysis blind spots that no timestamp check will reveal.

Have you ever looked into why your seemingly "fresh" dashboard displays different results from a colleague's report that uses the same data? It’s often due to one pulling from a fully updated table while the other accesses a stale partition, or a join combines data with mismatched refresh times, leading to skewed results. Standard monitoring rarely catches these gaps.

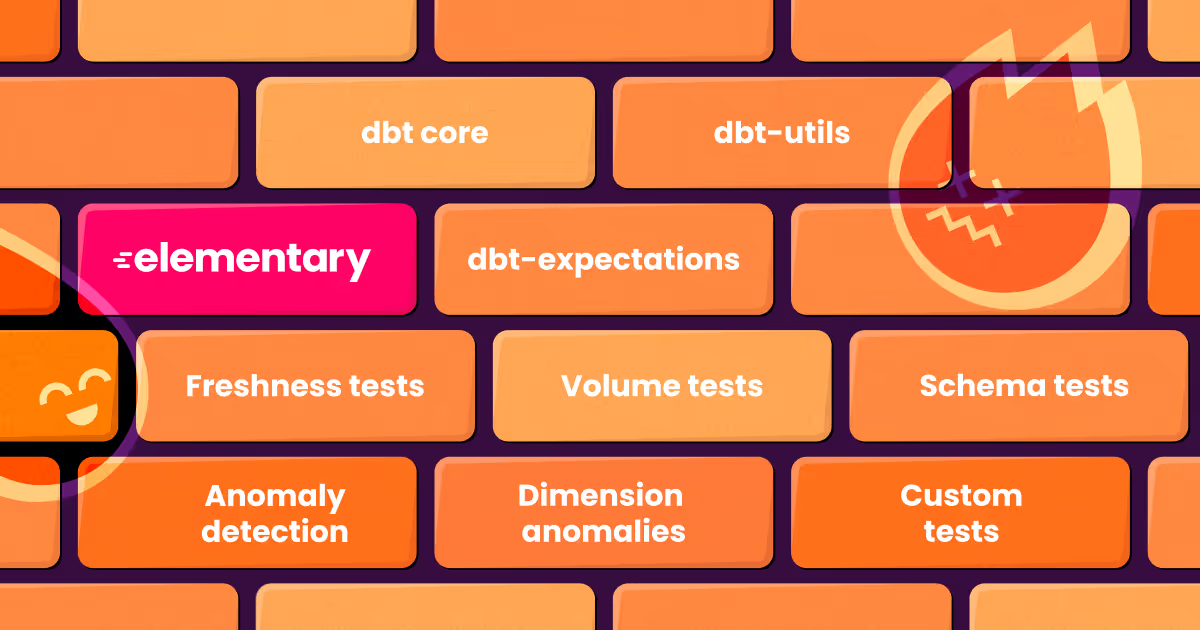

Elementary Data offers advanced tools to address these challenges, providing automated freshness monitors and anomaly detection tests that seamlessly integrate with your dbt projects.

In this article, we will discuss the complexities of data freshness and effective validation strategies. We will also explore how Elementary Data's solutions can enhance the accuracy and reliability of your data.

What is Data Freshness?

Data freshness is how up-to-date and relevant your data is. Fresh data gives you an accurate picture of what’s happening at a given time, while stale data lags, leading to decisions based on outdated information.

Why does data freshness matter?

- Smoother operations: Many business processes depend on real-time data – inventory tracking, fraud detection, and automated workflows. When data is stale, shipments may get delayed, alerts go out too late, and customers get frustrated.

- Better decisions: Imagine making investment choices based on last week’s numbers. That’s a disaster waiting to happen. Fresh data helps businesses act on what’s actually happening right now, not outdated insights.

- Satisfied customers: No one likes getting a discount code for something they already bought or being told an item is in stock when it’s actually sold out. Keeping data fresh means customers get relevant offers, accurate pricing, and a smooth experience.

Think about a fraud detection system in a fintech company. If transaction data isn’t fresh or is delayed by 20-30 minutes, fraudsters have a comfortable window to carry out unauthorized transactions before being detected. By the time the system catches up, the damage is already done, resulting in significant financial losses and eroding trust. Fresh data catches those threats in real time, reducing risks and protecting both businesses and customers.

Key Metrics to Measure Data Freshness

How do you know if your data is fresh? There are a few key ways to measure it:

- Data age (maximum age of data): You can check data freshness by looking at how old the data is. If a dataset hasn’t been updated in hours or days, it might not reflect ground reality anymore. What counts as fresh depends on the use case. For instance, an e-commerce website displaying product availability needs real-time inventory data to avoid overselling, while monthly sales reports can work with data that is a few days old.

- Data decay and relevance: Some data holds value for a long time, while other data quickly becomes outdated. For instance, financial transactions stay relevant for compliance and reporting for months, even years. But stock prices? They’re only useful if they reflect the latest market conditions. Understanding how quickly data loses value helps teams decide when to refresh it.

- Data timeliness: It’s not just about how recent data is but also about whether it arrives when it’s actually needed. This is why data quality timeliness matters: ensuring data isn’t just fresh but available at the time it is required for business decisions. For instance, if a sales team gets customer purchase trends at the end of the quarter instead of midway through, they’ve already missed their chance to act on the insights.

- Data accuracy: A dataset can be up to date but still misleading if it’s full of errors. An incorrect sales report updated five minutes ago is worse than an accurate one from yesterday. Making sure data is both fresh and correct is key to making reliable business decisions.

- Data latency and processing time: Data can be collected and analyzed in real-time. However, processing delays may render such data stale by the time it is available for use. If an e-commerce site takes hours to reflect updates in its inventory system, clients can place orders for products that are actually out of stock. The faster data moves through pipelines, the fresher and more valuable it stays.

By tracking these factors, companies can make sure their data isn’t fresh on paper but actually fresh enough to support smarter, faster decisions.

How to Improve Data Freshness in Your Data Pipelines

Data freshness ensures that the right information reaches the right place exactly when needed. If data arrives late or outdated, it’s as good as useless.

Here’s how to keep it fresh:

- Implement event-driven and real-time pipelines: Instead of waiting for scheduled updates, set up pipelines that react to changes instantly. If a customer updates their billing address, your system should reflect it immediately – not hours later – so invoices don’t go to the wrong place.

- Establish data freshness SLAs: Define clear expectations for how fresh data should be and monitor adherence. For instance, transaction records for a payment platform should be updated within seconds. For a sales report, an hourly refresh might be acceptable. Defining and tracking these targets keeps teams aligned.

- Monitor latency and processing time: If data takes too long to go from source to dashboard, it’s already outdated. Track how long it takes for data to move through ingestion, transformation, and storage so you can spot slowdowns before they cause problems.

- Automate data quality validation: Fresh data isn’t useful if it’s wrong. Automated checks can catch missing, duplicated, or outdated records before they cause headaches. An e-commerce company, for instance, wouldn’t want to show a sold-out product as "in stock" due to lagging inventory updates.

- Ensure pipeline idempotency: Design pipelines so that rerunning them doesn’t duplicate or corrupt data, preventing inconsistencies. Idempotent pipelines prevent errors from creeping in when things don’t go as planned.

Best Practices for Maximizing Data Freshness

Here are some best practices to ensure your data stays ready for use:

1. Establish a data governance framework

It’s easy for outdated or inconsistent data to slip through without clear policies. Set clear rules for managing data. A strong data governance framework defines how often data should be updated, who is responsible for it, and what to do when something looks off.

2. Prioritize critical data sources

Not all data needs to be updated every second. Identify which data sources – like customer transactions, fraud detection, or real-time inventory – need the most frequent updates. Elementary Critical Assets feature that allows you to mark essential data assets, ensuring they receive higher priority in monitoring and alerting. Slower-moving data, like annual reports, can follow a different schedule.

3. Use distributed processing for faster updates

Relying on a single system to process all data can slow things down. Distributed frameworks like Apache Kafka or Spark Streaming allow updates to happen in parallel, keeping things moving efficiently.

4. Ensure data lineage and observability

If you don’t know how data moves through your system, it’s hard to fix problems when something gets stale. Data lineage and observability tools help you trace updates, catch slow-moving data, and make sure everything stays current.

5. Implement real-time monitoring and alerts

Even the best systems can run into delays. Set up automated checks to flag delays and outdated records. When something isn’t updating as expected, alerts can help teams fix issues before they impact business decisions.

Testing Data Freshness with Elementary

Validating data freshness manually is a solid starting point, and Elementary makes it easy in both its open-source (OSS) and Cloud offerings. To test manually using elementary OSS, you can implement the `freshness_anomalies` test within your dbt models. This test monitors a table's `updated_at timestamp` column to detect delays or anomalies in data updates.

Example Configuration:

models:

- name: your_model_name

description: "Model description"

columns:

- name: updated_at

description: "Timestamp of the last update"

tests:

- elementary.freshness_anomalies:

severity: warnIn this setup, the test warns if significant delays or anomalies are detected in the `updated_at column` of `your_model_name`.

While manual freshness testing is effective, scaling these tests in large and complex data environments presents challenges:

- Data volume: Managing and testing vast amounts of data can be time-consuming and resource-intensive.

- Data variety: Diverse data formats and structures require customized testing approaches, increasing complexity.

- Data velocity: High-speed data generation demands real-time testing capabilities to ensure up-to-date information.

- Integration complexities: Coordinating freshness tests across multiple data sources and systems can lead to integration challenges.

These factors may result in delayed anomaly detection and an increased risk of data quality issues. Elementary provides Automated Freshness Monitors to address these challenges.

These monitors use machine learning to detect anomalies in production tables without manual configuration. They analyze metadata from your data warehouse, learning update patterns to identify unexpected delays. Here are some key benefits:

- Zero configuration: Automatically learns data behavior, eliminating the need for manual setup.

- Comprehensive coverage: Monitors all production tables in your dbt project, ensuring broad oversight.

- Resource efficiency: Operates using metadata, minimizing additional compute costs.

Elementary Data sets itself apart by incorporating observability directly into your dbt projects, providing several key benefits:

- Seamless dbt integration: Elementary uses the tools and syntax data engineers are already familiar with, such as dbt tests, tags, and configurations. This integration avoids duplicate work, ensuring a single source of truth in your codebase.

- Automated freshness monitoring: You can automatically collect logs and metadata from your dbt project using the Elementary dbt package.

- Cost-effective operation: Elementary uses metadata, minimizing additional computing costs associated with data freshness monitoring.

Get started with the Elementary OSS dbt package or book a demo to learn about automated monitors for data freshness with the Elementary Cloud Platform.